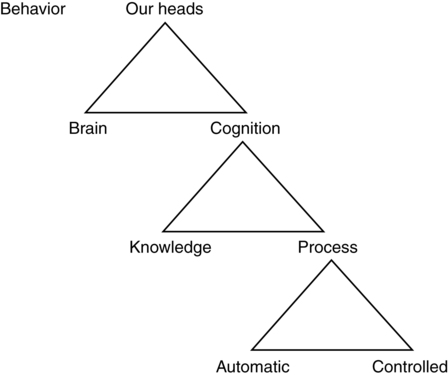

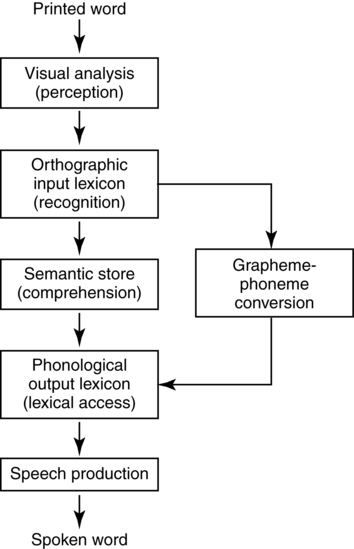

CHAPTER 1

Cognition is “an umbrella term for all higher mental processes . . . the collection of mental processes and activities used in perceiving, remembering, thinking, and understanding” (Ashcraft & Radvansky, 2010, p. 9). In contemplating their history, “cognitive psychologists generally agree that the birth of cognitive psychology should be listed as 1956” (Matlin, 2009, p. 7). Around this time, key publications and conferences steered psychology away from behaviorism. Three developments drove this change: the Skinner-Chomsky debate over nurture versus nature, George Miller’s estimate of short-term memory capacity as around seven units, and interest at Carnegie-Mellon University in the computer as an analogy for human information processing. The shift was complete when the Journal of Verbal Learning and Verbal Behavior became the Journal of Memory and Language in 1985. Essentially, psychologists admitted that mental processes exist.

This section introduces three of the four assumptions underlying the study of mental processes. They are presented as a hierarchy of dichotomies in Figure 1-1. First, there is a working distinction between behavior (as evidence) and what happens in our heads (as theory). Clinical diagnosis rests on a similar distinction between what we can observe (symptoms) and what we cannot observe (the diagnosed impairment). Scientists avoid statements like “comprehension is a behavior” so that they do not think carelessly and confuse one for the other.

Now that we are thinking inside the box, the second assumption differentiates the brain as a material thing from cognition as a mental thing. Cognition is what the brain does and therefore is not truly independent of the brain. For that reason, this dualism is largely a contrivance that reflects a research strategy. Cognitive psychologists approached their work as if “the mind can be studied independently from the brain” (Johnson-Laird, 1983).
Through the 1980s, cognitive psychology texts barely mentioned the brain. At that time, Flanagan (1984) stated that cognitive psychologists “by and large, simply seem not to worry about the mind-brain problem.” This dualistic approach was necessary because the technology for observing the brain (e.g., fuzzy structural imaging) could not be matched to the constructs used to measure mental operations. Now, with the emerging fine-tuned technologies of functional neuroscience (Cabeza & Kingstone, 2006; Gazzaniga, Ivry, & Mangun, 2008), current editions of texts on cognition include chapters on the brain and are sometimes adorned with colorful pictures from brain imaging (e.g., Ashcraft & Radvansky, 2010). Nevertheless, one can conduct experimental cognitive psychology without considering the brain and thus restrict theory to functional matters (e.g., how memory works, as opposed to how the brain works).

In our everyday vocabularies, “brain” and “mind” often refer to the same thing. Yet saying that someone has “lost his mind” does not mean that he has misplaced his brain (Box 1-1). In his text for speech-language pathologists, Davis (2007a) encouraged clear thinking by recommending that we keep what happens to the brain (e.g., stroke, trauma) logically distinct from what happens to cognition (e.g., aphasia, amnesia). We can say that stroke causes aphasia (not that aphasia causes stroke). Neurosurgeons treat the brain, speech-language pathologists treat cognition, and so on. Whether cognitive-language therapy rewires the brain is an open question. At the very least, to understand the nature of aphasia, we should have some idea of what happens to cognition.

Putting aside the brain, the third assumption focuses on cognition. Cognition consists of a fairly stable knowledge base and fleeting processes. This distinction was helpful when clinical pioneer Hildred Schuell proclaimed that what we do about aphasia depends on what we think aphasia is (Sies, 1974).
A frequent question has been whether aphasia is an erasure of language knowledge or a disruption of language processing (while knowledge remains intact). The answer informs the broad approach to therapy. Therapy might involve teaching words anew (because knowledge has been “lost”). Alternatively, it might exercise an impaired mental process that accesses a healthy store of vocabulary. Despite a layperson’s inclination to define aphasia as a “loss” of language, Schuell’s (1969) clinical experience led her to believe that “the language storage system is at least relatively intact” (p. 336). This belief, now supported by research, led her to advocate a “stimulation” approach to therapy.

Just as archaeologists build models of Troy based on analysis of unearthed floors and walls, cognitive scientists construct the most likely “functional architecture” of the mind from hundreds of carefully crafted experiments. Theoretical models are helpful in characterizing phenomena that are too big, too small, too old, or too obscure to be observed in everyday experience or “with the naked eye” (Davis, 1994). A layperson’s idea of “theory” can be heard in putdowns such as “it’s only a theory,” as if such an explanation were only a guess. A scientific theory, however, is a collection of coordinated hypotheses built from appropriate evidence (Stanovich, 2007). Appropriate evidence of global climate change, for example, would be long-term worldwide temperature trends, as opposed to looking out the window (Box 1-2). A fundamental tenet is that a theory should be falsifiable, meaning that “in telling us what should happen, the theory must also imply that certain things will not happen. If these latter things do happen, then we have a clear signal that something is wrong with the theory” (Stanovich, 2007, p. 20). A common type of nonfalsifiable theory is one that is so general that it can explain anything (see Shuster, 2004).
Explanations that appeal to hard-to-test factors, such as motivation, are also difficult to falsify. Several comparisons should produce a pattern of results consistent with a theory. The same comparisons should also allow for other patterns that would suggest a different theory. What follows are some of the basic approaches to making these comparisons in cognitive science.

A classic approach to experimentation is called mental chronometry, or additive/subtractive methodology. Franciscus Donders, a Dutch physician in the 1800s, was inspired by research to determine the speed of neural impulses. He used a subtraction method to measure the speed of mental operations in simple responses to lights. The experiment was a comparison between similar tasks. The general idea was that when one task takes longer than another, the difference in time measures the operation that made the task take longer. In the 1960s, Sternberg (1975) worried that two tasks could differ in more than one way, spoiling theoretical interpretation. His solution, in studying short-term memory scanning, was to compare several conditions that differed in only one respect (i.e., the additive method).

While mental chronometry was paving the way for disciplined study of human participants, the computer metaphor for the human mind encouraged computational modeling, or simulation (based on “connectionist models”). Such research implies a comparison to human beings and consists of “programming computers to model or mimic some aspects of human cognitive functioning” (Eysenck, 2006). Applying simulation to clinical populations, some investigators artificially lesion a program to mimic a disorder (Dell & Kittredge, 2011). Wilshire (2008) noted one strength of the simulation approach that cognitive theorists favor in general, namely, theoretical parsimony, or explaining the widest range of observations with the fewest assumptions.
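The subtractive and additive logic described above comes down to simple arithmetic on reaction times. The Python sketch below is only an illustration under stated assumptions. The reaction times are invented. The function names `subtractive_estimate` and `fit_line` are hypothetical. A least-squares line is one plausible way to summarize several set-size conditions. The sketch is not a reconstruction of Donders’s or Sternberg’s actual procedures.

```python
from statistics import mean
from typing import List, Tuple


def subtractive_estimate(rt_baseline_ms: float, rt_extended_ms: float) -> float:
    """Donders-style subtraction: the extra time taken by the longer task is
    attributed to the single mental operation it is assumed to add."""
    return rt_extended_ms - rt_baseline_ms


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of ys = intercept + slope * xs.
    Returns (intercept, slope)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


if __name__ == "__main__":
    # Hypothetical mean reaction times (ms), invented for illustration only.
    simple_rt = 220.0   # respond to any light
    choice_rt = 300.0   # respond differently depending on which light appears
    print("Added operation:", subtractive_estimate(simple_rt, choice_rt), "ms")

    # Hypothetical memory-scanning data: the conditions differ only in how
    # many items are held in memory (memory set size).
    set_sizes = [1, 2, 3, 4, 5, 6]
    rts = [440, 480, 520, 560, 600, 640]
    intercept, slope = fit_line(set_sizes, rts)
    # The slope is read as the time to compare the probe with one more stored
    # item. The intercept collects everything else (e.g., encoding, responding).
    print(f"~{slope:.0f} ms per item, intercept ~{intercept:.0f} ms")
```

With these made-up numbers, the sketch attributes 80 ms to the added operation. It also reports a slope of about 40 ms per memory item. Each set-size condition differs from its neighbor in only one respect. That design is what allows the slope to be read as the cost of a single repeated operation rather than a mixture of differences.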
A third approach is cognitive neuropsychology, in which brain-injured subjects are studied to test theories of cognition. Viewed broadly, this discipline can be any study of cognition involving brain-damaged people that aims to understand normal function as well as dysfunction. One branch of this field, often abbreviated “CN,” focuses on single cases. Each case is treated as an example of a lesion that hypothetically knocks out one component of a processing model underlying a simple task (Rapp, 2001; Whitworth, Webster, & Howard, 2005). A typical model of reading aloud, given in Figure 1-2, provides a menu of possible impaired components. Although proponents of CN speak of testing theories of language in general, their research is restricted to single words. This narrow window on language concerned Wilshire (2008; also Davis, 1989), as did the absence of a distinction between automatic and controlled processing. She noted that computer simulation has the additional advantage of explaining how, and to what extent, a cognitive component may be malfunctioning.

“We use the term attention to describe a huge range of phenomena” (Ashcraft & Radvansky, 2010, p. 112). Attention is commonly considered a cognitive process that concentrates mental effort on an external stimulus or on an internal representation or thought. Attending to external stimuli may be called “input attention,” which is the basic mechanism for selecting sensory information for cognitive processing. Input-directed attention includes an orienting reflex, which directs us toward an unexpected stimulus. It also includes attention capture, which is driven by physical characteristics, namely, significance, novelty, and social cues.

Ashcraft (1989) wrote that cognition is “the coordinated operation of active mental processes within a multicomponent memory system” (p. 39). A simple definition of memory is any retention of information in the mind beyond the life of an external stimulus (i.e., minimal memory).
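The single-case logic of cognitive neuropsychology, described above before the discussion of attention, can be sketched as a search over single-component lesions. The Python below assumes a simplified, hypothetical dual-route model of reading aloud. Its component names, routes, and stimulus types are illustrative assumptions and not necessarily the model in Figure 1-2. In the sketch, any route whose components are all intact can read a stimulus correctly. Knocking out one component therefore predicts a pattern of successes and failures. That predicted pattern can then be compared with a patient’s performance.

```python
from typing import Dict, FrozenSet, List

# Hypothetical, simplified dual-route model of reading aloud (an assumption for
# illustration; not necessarily the model shown in Figure 1-2).
COMPONENTS: List[str] = [
    "visual analysis",
    "orthographic input lexicon",
    "semantic system",
    "phonological output lexicon",
    "grapheme-phoneme conversion",
    "phoneme level",
]

# Each route is the set of components it passes through.
ROUTES: Dict[str, FrozenSet[str]] = {
    "lexical-semantic": frozenset({"visual analysis", "orthographic input lexicon",
                                   "semantic system", "phonological output lexicon",
                                   "phoneme level"}),
    "lexical-nonsemantic": frozenset({"visual analysis", "orthographic input lexicon",
                                      "phonological output lexicon", "phoneme level"}),
    "sublexical": frozenset({"visual analysis", "grapheme-phoneme conversion",
                             "phoneme level"}),
}

# Routes that yield a correct response for each stimulus type (assumed):
# irregular words need a lexical route, and nonwords need the sublexical route.
USABLE_ROUTES: Dict[str, List[str]] = {
    "regular words": ["lexical-semantic", "lexical-nonsemantic", "sublexical"],
    "irregular words": ["lexical-semantic", "lexical-nonsemantic"],
    "nonwords": ["sublexical"],
}


def predict(lesioned: str) -> Dict[str, bool]:
    """Predict success (True) or failure (False) for each stimulus type when
    exactly one component is knocked out. A stimulus succeeds if at least one
    usable route avoids the lesioned component."""
    return {
        stimulus: any(lesioned not in ROUTES[route] for route in routes)
        for stimulus, routes in USABLE_ROUTES.items()
    }


def candidate_lesions(observed: Dict[str, bool]) -> List[str]:
    """Return every single-component lesion whose predicted pattern matches
    the observed pattern of successes and failures."""
    return [c for c in COMPONENTS if predict(c) == observed]


if __name__ == "__main__":
    # Hypothetical single case: irregular words fail; regular words and
    # nonwords are read correctly.
    case = {"regular words": True, "irregular words": False, "nonwords": True}
    print(candidate_lesions(case))
```

In this toy model, a case who fails only on irregular words is consistent with damage to either the input lexicon or the output lexicon. One task alone cannot decide between the two, so additional tasks would be needed. The all-or-none lesions also illustrate Wilshire’s point. A model of this kind says which component is damaged, but not how that component is malfunctioning or how badly.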
The ability to hold information in our heads is fundamental to the mind’s (or brain’s) ability to perform even the simplest functions, such as perception and recognition. The memory system follows the knowledge-process distinction mentioned earlier. Its major components are long-term memory (LTM), for passive storage of information, and working memory (WM), for constraining the activity of processing.

A library is a common analogy for characterizing our LTM system. A library acquires books, stores them, and has procedures for access and retrieval (i.e., input-storage-output). Like a library, LTM contains different types of information. Knowledge may have a verbal representation, like novels, or a pictorial representation, like picture books. Tulving (1972) proposed the following types of knowledge:

• Episodic memory for individually experienced events (autobiographical memory)
• Semantic memory for general conceptual knowledge of the world
• Lexical memory for word forms and information about words

Aphasiologists take particular note of the separate stores for words and world knowledge. The concept of trees may be a universal element of semantic memory, but the word for it varies from language to language and is stored in lexical memory. An aphasic person knows what he or she wants to convey but cannot access the words. In general, many case studies support the validity of these LTM stores. These studies show that neuropathologies can impair access to one type of memory but not others (Schacter, 1996).

Because we are most interested in language, let us focus on semantic memory. Its core is universal in the sense that most people have the same basic knowledge of objects and actions, living and nonliving things. The fringes of world knowledge vary according to locale, culture, or expertise. Semantic memory is central to comprehension and the meaningful use of words. In fact, it can be said that semantic memory contains word meanings.
The simplest unit of semantic memory is a concept, which may be defined as the representation of a class of objects or actions. Although concepts are stored separately from words, the two stores are intimately connected (Box 1-3).
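The separation of semantic and lexical stores can be pictured as a data structure. So can Schuell’s view that aphasia disrupts access rather than erasing knowledge. The Python sketch below is a hypothetical toy, not a model from the literature. The class `LongTermMemory`, its fields, and the `lexical_access_ok` switch are assumptions made for illustration. The sketch stores each concept once, without reference to any language. Word forms are stored separately for each language and linked to a concept.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class LongTermMemory:
    """Hypothetical toy LTM with separate stores, like sections of a library."""

    # Semantic memory: concept -> features (world knowledge, language-neutral).
    semantic: Dict[str, Set[str]] = field(default_factory=dict)
    # Lexical memory: (language, concept) -> word form.
    lexical: Dict[Tuple[str, str], str] = field(default_factory=dict)
    # Episodic memory: individually experienced events.
    episodic: List[str] = field(default_factory=list)
    # Hypothetical switch: False models impaired retrieval with stores intact.
    lexical_access_ok: bool = True

    def knows(self, concept: str) -> bool:
        """Conceptual knowledge is checked in semantic memory only."""
        return concept in self.semantic

    def name(self, concept: str, language: str) -> Optional[str]:
        """Retrieve a word form; returns None if access fails or no word is stored."""
        if not self.lexical_access_ok:
            return None
        return self.lexical.get((language, concept))


if __name__ == "__main__":
    ltm = LongTermMemory(
        semantic={"tree": {"plant", "has a trunk", "has branches"}},
        lexical={("English", "tree"): "tree", ("French", "tree"): "arbre",
                 ("German", "tree"): "Baum"},
        episodic=["climbed the oak in the back yard"],
    )
    print(ltm.name("tree", "German"))   # one concept, different words by language
    ltm.lexical_access_ok = False        # disrupt access, not storage
    print(ltm.knows("tree"), ltm.name("tree", "German"), len(ltm.lexical))
```

Turning off `lexical_access_ok` leaves both stores untouched. The toy person still “knows” trees, and the word forms are still on the shelf, yet naming fails. The single on/off switch is a deliberate simplification that mirrors only the knowledge-process distinction.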

The cognition of language and communication

Assumptions in the study of cognition

Approaches to the study of cognition

Attention

Memory

Long-term memory
