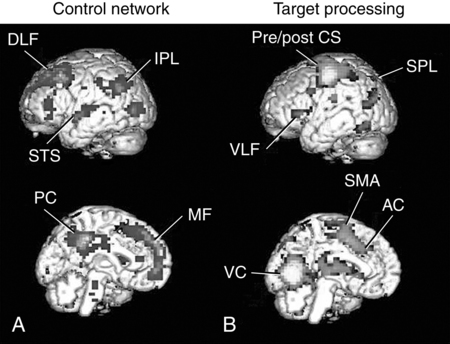

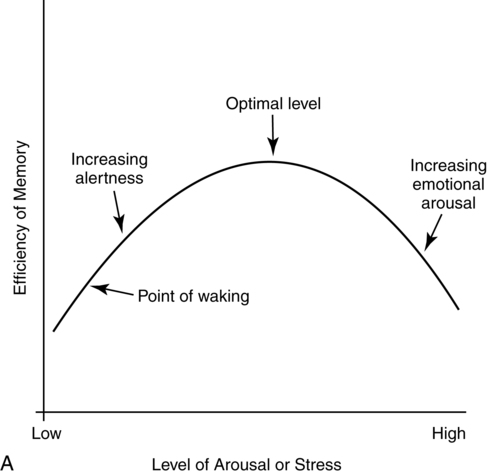

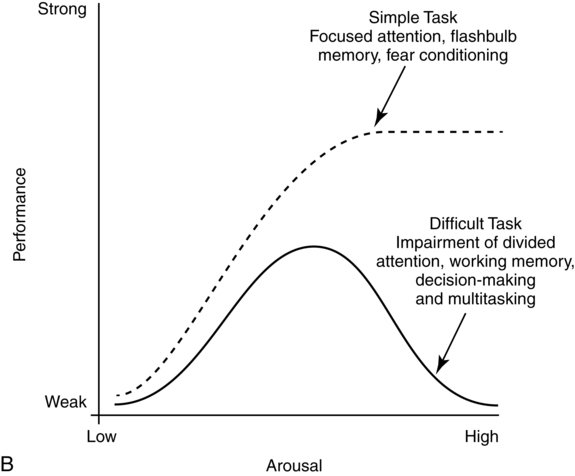

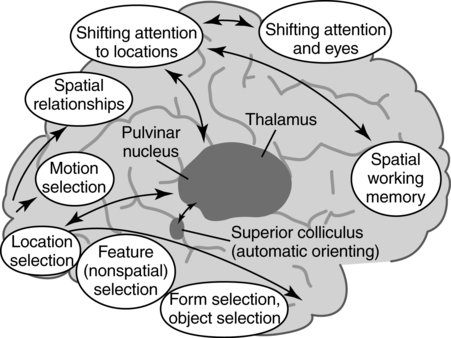

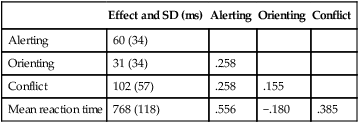

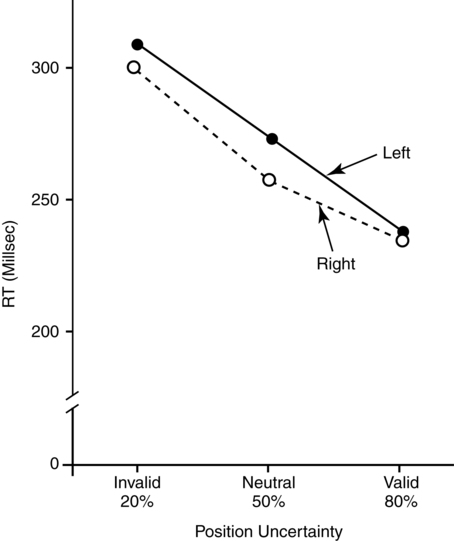

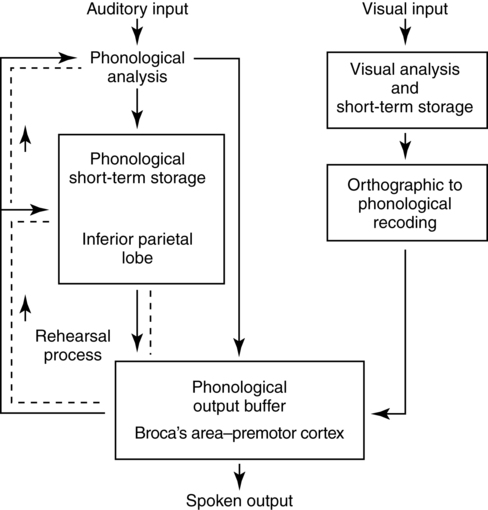

CHAPTER 4 Thomas H. Carr and Jacqueline J. Hinckley

What sort of evidence might support such a claim? Kastner, Pinsk, De Weerd, et al. (1999), as well as Corbetta and colleagues (e.g., Corbetta, Kincade, Ollinger, et al., 2000), used functional magnetic resonance imaging (fMRI) to show that the brain regions active in response to a spatial cue, which told the participant where to look for an upcoming stimulus, differed from the regions active later, when the stimulus actually appeared and was being perceived. The latter, perceptual regions were activated more strongly if the cue had correctly signaled where the stimulus would appear, so that the upcoming location had become the focus of spatial attention. Activation of the regions that processed the cue (the “attentional regions”) predicted both the activation of the perceptual regions and the speed and accuracy of behavioral responses in the task. Thus, distinct brain regions appeared to generate attentional information based on the cue and to send out control signals that modulated the operation of the perceptual regions actually responsible for processing the stimulus. An excellent example of this type of research comes from Hopfinger (2000), whose results are shown in Figure 4-1.

What are the functions of attention? There has been much discussion of this question. For present purposes we follow the lead of Posner and Petersen (1990), distinguishing three major functions and attributing each to its own system of neural structures. System 1 is responsible for arousal, alerting, and vigilance. System 2 is responsible for orienting toward and selecting sources of information, both from the external perceivable world and from memory. System 3 is responsible for executive control and supervision of intentional, goal-directed task performance, and includes working memory.

Using a procedure they called the Attention Network Test (ANT), Fan, McCandliss, Fossella, et al.
(2005) combined three manipulations in a single task, one for each system. The ANT was administered in a magnetic resonance imaging (MRI) scanner, so that the distribution of blood oxygen levels across the brain could be measured to indicate regional brain activation while the task was being performed. Blood oxygen levels vary in a fairly direct way with neuronal activity, so fMRI provides a good indication of where neurons are busy processing information.

The ANT, shown in Figure 4-2, required the participant to focus attention on a fixation cross that was constantly present in the middle of the field of view on a computer display projected into the scanner. On each trial a target arrow appeared either directly above or directly below the fixation cross. The participant’s job was to indicate the arrow’s direction as rapidly and accurately as possible by pressing a button on the left if the arrow pointed left or on the right if the arrow pointed right. Box 4-1 summarizes the manipulations used in this study.

We begin with the manipulation relevant to System 3—Executive Control and Supervision, because it is fundamental to understanding the logic of the ANT. Participants needed to respond to the direction of a target arrow, but the target arrow did not appear by itself. It occurred in the middle of a row of five arrows, two on either side, and the flanking arrows were designated as irrelevant: the decision about direction was to be made on the basis of the middle arrow only. Thus, this task was a version of Eriksen and Hoffman’s (1972) “Flanker Task.” It is well established that if flanking stimuli are close enough in space to the target, they are difficult to ignore and will be processed to some degree unless attention is focused very carefully on the target.
This is more likely to happen if the target stimulus is simple and easy to process and interpret (Lavie, 1995; 2006; see also Huang-Pollock, Nigg, & Carr, 2002), which an arrow certainly is, and the flanking arrows are equally simple and easy to process. Reaction time differences for the effects of alerting, selective attention (“orienting” in the table), and executive control (“conflict” in the table), as well as the correlations among these differences, are shown in Table 4-1. Each manipulation had a measurable effect. Performance was 60 ms faster (about 7.5%) when alerted, 31 ms faster (about 3.8%) when given orienting information, and 102 ms slower (about 12.5%) when dealing with the conflict created by incongruent flankers. None of the correlations among these difference scores was significant, meaning that, statistically, the effects were independent of one another. This is consistent with the hypothesis that they reflect the operation of independent processes, as suggested by Posner and Petersen’s (1990) view of the organization of the attentional systems.

Table 4-1 Reaction-Time Effects of Alerting, Orienting, and Conflict, and the Correlations Among Them. * Correlation is significant at the .05 level (two-tailed). From Fan, J., McCandliss, B. D., Fossella, J., Flombaum, J. I., & Posner, M. I. (2005). The activation of attentional networks. NeuroImage, 26, 471–479.

Mental energy levels and readiness to engage with a task are important to performance. Fan and colleagues (2005) found a specific neural substrate associated with the impact of one aspect of getting ready, which they called alerting: receiving a specific warning that a task stimulus was coming soon. Kahneman (1973, p.
13) called the process of getting ready “the mobilization of effort,” and related it more generally to the concept of arousal—overall physiological activity level in the nervous system, reflected in measures such as heart rate, galvanic skin response, diameter of the pupil of the eye, and activity in the locus coeruleus or “reticular activating system.” Neurochemical studies have focused on pathways between locus coeruleus and cortex that rely on noradrenalin as the primary neurotransmitter, as well as pathways connecting basal forebrain, cortex, and thalamus that rely on acetylcholine and dopamine (Cools & Robbins, 2004; Parasuraman, Warm, & See, 1998; Ron & Robbins, 2003). It appears that pathways involving these neurotransmitter systems in anterior cingulate cortex, right prefrontal cortex, and thalamus are particularly important in modulating arousal and the ability to mobilize effort. First, readiness varies with the circadian sleep-wakefulness cycle. Work relating this cycle to the speed and accuracy of task performance has been summarized by Hasher, Zacks, and May (1999). Some people are “morning people” and others are “evening people” or even “night owls.” That is, the time of day at which people feel most energetic and prefer to engage in demanding mental or physical activity varies systematically from person to person. Such preferences are persistent and reliable enough to be measured psychometrically—for example, using Horne and Ostberg’s Morningness-Eveningness Questionnaire. Hasher and colleagues reported that people tested at their preferred or optimal time of day perform faster and more accurately in a wide range of tasks than if they are tested at earlier or later times, when they say they are likely to feel tired or would prefer less strenuous activity. Time-of-day preferences vary strikingly with age (Table 4-2). Young adults tend toward eveningness, whereas older adults tend rather strongly toward morningness. 
Testing younger and older adults in the evening exaggerates the differences due to cognitive aging, which generally favor young adults: young adults are more likely to be at or near their optimal time of day, whereas older adults are wearing down. Testing in the morning reverses relative energy and readiness levels, with younger adults at a low point and older adults at or near their optimal time of day. The result is a reduction or even elimination of the age difference favoring younger adults, with the amount of reduction depending on the task.

Table 4-2 Distribution of “Morning People” Versus “Evening People” as a Function of Age. Rights were not granted to include this table in electronic media; please refer to the printed book. Young adults ranged in age from 18 to 22 years; older adults ranged in age from 66 to 78 years. From May, C. P., Hasher, L., & Stoltzfus, E. R. (1993). Optimal time of day and the magnitude of age differences in memory. Psychological Science, 4, 326–330.

A very similar pattern can be seen on a much smaller time scale in manipulations of alerting such as those used by Fan and colleagues (2005). For example, Posner and Boies (1971) used a same-different matching task in which a pair of letters was presented sequentially. The participant’s job was to indicate as rapidly and accurately as possible whether the two letters were the same or different. Posner and Boies found that providing a warning signal in advance of the first letter speeded the judgment. The amount of benefit, however, depended on how far in advance the warning signal occurred. The benefit increased over the first half second or so, reaching a maximum with an interval of 400 to 600 ms between the onset of the warning signal and the onset of the first letter. This is typical of reaction-time tasks requiring relatively simple judgments.
Beyond this optimum warning interval of about half a second, the benefit declines over the next few seconds, suggesting that it is difficult to maintain maximum readiness for very long (Kornblum & Requin, 1984; Thomas, 1974). Extending readiness over longer periods (minutes or hours) proves to be a major problem. Failure under such demands for “vigilance” causes much human error in real-world task performance, especially when events that actually call for action are rare, or when the performer is at a nonoptimal point in the sleep-wakefulness cycle (Parasuraman et al., 1998; Thomas, Sing, Belenky, et al., 2000). Under sleep deprivation, performance becomes highly variable: some stimuli elicit a normal response, some a very slow response, and some are missed altogether (Doran, Van Dongen, & Dinges, 2001).

The general shape of the resulting function is captured by what has come to be called the Yerkes-Dodson Law, which relates level of arousal to the quality of performance. As shown in Figure 4-3, A, there is in general an optimum level of arousal, and resulting readiness, at which performance of any given task is maximized. Arousal levels lower or higher than this produce poorer performance. As shown in Figure 4-3, B, the optimum level of arousal varies with task complexity. In the simplest tasks, high levels of arousal are helpful. As task complexity increases, high levels of arousal become harmful. Progressively lower arousal levels are required for best performance as the number of component processes increases, or as larger amounts of information must be attended to or maintained in working memory. But for any task, arousal levels substantially lower than the optimum depress performance, as do levels substantially higher than the optimum, though perhaps for different reasons. Low arousal results in sluggishness or even drowsiness, leading to poor preparation and readiness to perform.
Very high arousal appears to restrict attention and interfere with executive control and decision making (for discussions of the Yerkes-Dodson function, see Anderson, 1994; Kahneman, 1973; Yerkes & Dodson, 1908).

A simple fact of mental life is that more information is available in the environment than a human being can take in, notice, interpret in a meaningful fashion, act upon, or store in memory. That is the reason for studies and theories of selective attention: the processes that regulate the flow of information by giving priority, reducing priority, or perhaps even producing inhibition, so that the next time an inhibited stimulus occurs it will be harder to perceive than it was before (Dagenbach & Carr, 1994; Keele, 1973; Pillsbury, 1908; Posner & Petersen, 1990; Treisman, 1969, 1988). As Keele (1973, p. 4) put it, “One use of the term attention implies that when a person is attending to one thing, he cannot simultaneously attend to something else. Typing is said to require attention because one cannot simultaneously type and participate in conversation. Walking is said to require very little attention because other tasks performed simultaneously, such as thinking, interfere very little with walking.” Typing and walking are complicated activities extended in time, but much simpler perceptual and sensorimotor tasks also show the impact of attention. One of the simplest and most widely used methods of isolating and measuring selective attention was applied in the Attention Network Test of Fan and colleagues (2005). Stimuli could appear at two different locations in the visual environment, and even this limited variety taxed the perceiver’s capacity to some extent, as evidenced by the benefit that arose when a cue directed attention to the upcoming stimulus location. Giving the perceiver the ability to select and monitor the relevant location from which information would be arriving proved to be helpful.
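The difference-score logic behind the ANT effects reported in Table 4-1 can be made concrete with a short sketch. It assumes the cue conditions commonly used with the ANT (no cue, a double cue, a central cue, and a spatial cue at the target’s location), since Box 4-1 is not reproduced here, and it generates invented reaction times from a simple additive model rather than using Fan and colleagues’ data. The names `condition_mean` and `network_scores` are hypothetical. Each score is the mean RT in one condition minus the mean RT in its comparison condition; the percentage expresses that difference relative to the overall mean RT.

```python
from statistics import mean

# Hypothetical additive model used ONLY to generate illustrative trials:
# RT (ms) = base + cue offset + flanker offset. These are not real ANT data.
BASE_MS = 500
CUE_OFFSET = {"no_cue": 50, "double_cue": 0, "center_cue": 10, "spatial_cue": -20}
FLANKER_OFFSET = {"congruent": 0, "incongruent": 90}

# One trial per cell of the fully crossed cue-by-flanker design.
trials = [
    (cue, flanker, BASE_MS + cue_ms + flanker_ms)
    for cue, cue_ms in CUE_OFFSET.items()
    for flanker, flanker_ms in FLANKER_OFFSET.items()
]


def condition_mean(trials, cue=None, flanker=None):
    """Mean RT over trials matching the given cue and/or flanker type (None = any)."""
    return mean(
        rt
        for c, f, rt in trials
        if (cue is None or c == cue) and (flanker is None or f == flanker)
    )


def network_scores(trials):
    """ANT-style difference scores in ms; positive values are in the expected direction."""
    return {
        # Alerting: benefit of a warning that carries no spatial information.
        "alerting": condition_mean(trials, cue="no_cue")
        - condition_mean(trials, cue="double_cue"),
        # Orienting: extra benefit of knowing where the target will appear.
        "orienting": condition_mean(trials, cue="center_cue")
        - condition_mean(trials, cue="spatial_cue"),
        # Conflict (executive control): cost of incongruent flankers.
        "conflict": condition_mean(trials, flanker="incongruent")
        - condition_mean(trials, flanker="congruent"),
    }


grand_mean = condition_mean(trials)
for name, diff in network_scores(trials).items():
    print(f"{name}: {diff} ms ({100 * diff / grand_mean:.1f}% of mean RT)")
```

Because each score is a difference computed within a participant, the three scores can be correlated across participants, which is how the independence of the effects described above was assessed.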
Fan’s experiment did not measure what would have happened if the cue had been misleading, that is, if it had signaled, and hence called attention to, a location other than where the stimulus would ultimately appear. Other studies give the answer. Performance is hurt, with reaction time and/or accuracy falling below what would have been achieved with no cue at all. Both effects, the facilitation or benefit from a valid cue and the inhibition or cost from an invalid cue, are illustrated in Figure 4-4.

Posner and Petersen (1990), using visual attention as an example, suggested that orienting to and selecting a source of input is built around three component operations implemented in sequence. This is the “functional architecture” of selective attention, consisting of “disengage,” “move,” and “engage.” Each operation is accomplished by a different region of neural tissue (the “functional anatomy” of selective attention). Posner and Petersen hypothesized that the “disengage” operation is supported by left and right posterior parietal cortex (and, as we will see, by neighboring posterior regions of superior temporal cortex). The disengage operation implements a decision to curtail the priority being given to input from the location on which attention is currently focused. The decision to give up a source of input as a priority in order to shift to another source might be made intentionally by executive control processes in working memory and transmitted to parietal cortex as a top-down, goal-directed instruction, or it might arise from bottom-up signals driven by the sudden onset of a new, high-intensity, or potentially important stimulus in the environment. This distinction between top-down or endogenous control and bottom-up or stimulus-driven exogenous control was not explicitly built into Posner and Petersen’s anatomical model, but it is necessary to the story.
Exogenous control is also called automatic or reflexive attention capture and has been the topic of considerable research (Folk, Remington, & Johnston, 1992; Folk, Remington, & Wright, 1994; Schreij, Owens, & Theeuwes, 2008; Yantis, 1995). In either case, implementing a shift of attention from its current focus to a new one begins with disengaging from the current focus. But after disengagement, where does attention go, and how does it get there? The second operation is “move,” implemented via interactions between posterior parietal cortex and superior colliculus, both of which contain topographic maps of environmental space. Single shifts of attention appear to be the responsibility of more inferior regions of parietal cortex and neighboring temporal cortex interacting with superior colliculus, whereas a planned sequence of attentional foci, moving systematically from one location to another, appears to recruit additional, more superior parietal regions (Corbetta, Shulman, Miezin, & Petersen, 1993). Since 1990, the functional anatomy of the “posterior attention system,” as Posner and Petersen called this network of regions responsible for selective attention, has grown more elaborate. Figure 4-5, borrowed from a widely used textbook in cognitive neuroscience (Gazzaniga, Ivry, & Mangun, 2009), assigns a larger array of attention-relevant functions to posterior anatomical regions in accord with this elaboration.

Effects much like those observed in vision are obtained in studies of audition. A cue to the location from which sounds or speech will come facilitates detection and identification. A misleading cue that causes perceivers to orient attention to the wrong location harms detection and identification (Mondor & Zatorre, 1995). There is a tight relationship between visual and auditory attention.
Studies by Driver and Spence (e.g., 1994, 1998) show that visual cues attract auditory attention and vice versa, though these cross-modal cues may not be as powerful as within-modality cues. Cross-modal costs and benefits suggest a partially overlapping hierarchical organization, in which each sensory modality has to some degree its own dedicated piece of the attentional system, but these dedicated components in turn feed into and are modulated by a more general system that serves multiple modalities. Cross-modal interactions are particularly important in speech perception. Visual information from lip configurations affects the identification of spoken syllables and words: congruent information, in which the lip configuration corresponds to the sound that is heard, facilitates identification, whereas an incongruent lip configuration and auditory information impede identification. This is called the “McGurk Effect” (McGurk & MacDonald, 1976; see also, e.g., Jones & Callan, 2003; Rosenblum, Yakel, & Green, 2000). In a study that required selective repetition of one of two auditory messages, one presented from the left side of space and the other from the right, Driver and Spence (1994) found that a video of the to-be-repeated speaker helped more if it was presented on the same side as the message was coming from. Thus, being able to align spatial attention in the visual and auditory modalities facilitates integration of the information.

The mechanics of how attention intervenes to modulate information processing have been debated for a long time. In 1995 Desimone and Duncan (see also Duncan, 2004) proposed that attention operates directly on pathways of information flow and representations of information, adding activation to a pathway or representation in order to give it priority over others. According to this hypothesis, pathways that are irrelevant to the goal being served are ignored by attention and therefore left to their own devices.
They may or may not become activated, but if they do, they are unlikely to become more active than the ones biased by the boost supplied from attention. Some might, however, which creates a mechanism for exogenous control of attention: the unintended reflexive shifts of attention discussed earlier. These “hyperactivating” stimuli include newly appearing stimuli with sudden onsets (Klein, 2004; Yantis, 1995), familiar stimuli with a long history of importance (Cherry, 1953; Treisman, 1969; Carr & Bacharach, 1976), and stimuli with properties that make them very easily recognizable as relevant to a current task goal, such as a particular color or shape (Folk, Remington, & Johnston, 1992; Folk, Remington, & Wright, 1994; Schreij, Owens, & Theeuwes, 2008).

Of course, speech is an auditory signal that can attract attention. However, once speech begins, internal features further modulate the listener’s attention. Hesitations during otherwise fluent speech serve to orient attention to the subsequent word. In a study using event-related potentials (ERPs), Collard, Corley, MacGregor, and Donaldson (2008) presented spoken sentences that ended with either a predictable or an unpredictable word. In half of the cases, the sentence-final word was preceded by a hesitation, “er.” The ERP results suggested that the hesitations helped to orient attention to the following word, thus decreasing the novelty (P300) response when the sentence-final word was unpredictable. This example showcases the important role of orienting and selective attention in understanding spoken language.

A foundation is laid early in infancy for communicative interaction to direct attention. From birth, mothers and fathers look at the faces of infants during many of their interactions (though by no means all).
In these face-to-face interactions, adults will follow the gaze of the infant and respond to it vocally, asking simple (but possibly quite developmentally profound) questions like “Oooh—what do you see?” Later on, adults will say things like “Oh, look at that” and accompany the directive with a shift of gaze and a point. By 12 to 18 months of age, infants will follow these gazes and points, orienting head, eyes, and presumably attention in their direction. Only slightly later, infants will themselves look, point, and vocalize in apparently intentional ways during social interactions, trying to direct the attention of their interactional partner. These changes during the first year and a half of life have been researched as the development of shared attention and joint regard. Bruner (1975) and Bates (1976) argued that these developments are important precursors both to the attentional powers of language and to one of the fundamental uses of communication (for a review, see Evans & Carr, 1984).

Where the head is turned and where the eyes are looking continue to be important indicators of attention throughout life, and have been used increasingly as real-time indicators of language comprehension (Henderson & Ferreira, 2004; Tanenhaus, 2007). A great boon to this research was the invention of small, lightweight eye-movement monitoring equipment that can be worn like a hat while carrying out a task that might involve reaching for objects, picking them up, and moving them around or using them in response to instructions, or walking from one place to another (Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995). More generally, it is quite clear that descriptions of the visual environment serve to direct the listener’s attention. There are multiple consequences, among them the establishment of joint regard and the correct following of instructions. A lasting consequence involves the memories that the hearer of a description carries away from the experience.
Bacharach, Carr, and Mehner (1976) showed fifth-grade children simple line drawings, each containing two interacting objects: a bee flying near a flower, a boy holding up his bicycle, and so forth. Before each picture, an even simpler description was provided: “This is a picture of a bee,” or “This is a picture of a bicycle.” After the list was completed, a memory test was administered in which pictures of single objects were shown one at a time. The child’s job was to say for each object whether it had appeared in any of the pictures. A baseline condition with no descriptions established that all the objects were equally likely to be remembered. With a description before each picture, however, objects that had been named in the descriptions were remembered more often than baseline, whereas objects that had not been named were remembered less often.

An easy introduction to System 3—Executive and Supervisory Control is to quote from the investigator most closely associated with the system’s other name, Working Memory. As Baddeley (2000) puts it:

> The term working memory appears to have been first proposed by Miller, Galanter, and Pribram (1960) in their classic book Plans and the Structure of Behavior. The term has subsequently been used in computational modeling approaches (Newell & Simon, 1972) and in animal learning studies, in which the participant animals are required to hold information across a number of trials within the same day (Olton, 1979). Finally, within cognitive psychology, the term has been adopted to cover the system or systems involved in the temporary maintenance and manipulation of information. Atkinson and Shiffrin (1968) applied the term to a unitary short-term store, in contrast to the proposal of Baddeley and Hitch (1974), who used it to refer to a system comprising multiple components. They emphasized the functional importance of this system, as opposed to its simple storage capacity. It is this latter concept of a multicomponent working memory that forms the focus of the discussion that follows.

And so it does. The topics of Working Memory and Executive Control are treated in detail in Chapters 5 and 7 of this book. Here we raise the matters most relevant to issues taken up later in this chapter, which include training of attention, skill acquisition, and the impact of motivation, emotion, self-concept, and pressure on attention and performance.

The basics of the functional architecture of working memory can be seen in Figure 4-6. The pieces of mental equipment that make up this multicomponent system are the executive controller plus three “buffers,” or short-term storage devices. One of these buffers is the “phonological loop,” which maintains a small amount of verbal information for rehearsal (for example, three to nine unrelated syllables, possibly more if the sequence consists of related words that can be chunked into groups). The exact capacity is debated (e.g., Carr, 1979; Cowan, 2000; Jonides, Lewis, Nee, et al., 2008; Miller, 1956), but there is wide agreement that rehearsal is needed to keep the information active and readily available. Once attention is turned to other activities and rehearsal stops, the information that was being rehearsed either fades or is replaced by the new material to which attention has turned. A second buffer is the “visuospatial sketchpad,” which fulfills an analogous function for visual information, storing images rather than sounds, syllables, or words. An analogous debate has been waged over the capacity of the visual storage buffer, with perhaps slightly smaller estimates of capacity (Alvarez & Cavanagh, 2004; Awh, Barton, & Vogel, 2007; Jonides, Lewis, Nee, et al., 2008; Vogel & Machizawa, 2004; Xu & Chun, 2006).
The third storage subsystem, which is the main focus of the caption in Panel A, is the “episodic buffer.” The episodic buffer is a late addition to the theory of working memory (although it bears kinship to the concept of “long-term working memory” described by Ericsson & Kintsch, 1995).

What is the functional anatomy, or brain circuitry, of working memory? Pioneering work on verbal versus spatial working memory by Smith and Jonides (1997) showed that verbal working memory was heavily left-lateralized, as one might expect from the neuropsychological data on brain injury (Banich, 2004, Chapters 10 and 11). In this important study, a task requiring short-term memory for letters activated a large region of tissue in left prefrontal cortex and a smaller region in left parietal cortex. Subsequent neuroimaging research using fMRI rather than positron emission tomography (PET) has been quite consistent with Smith and Jonides’ early findings. In contrast to verbal working memory, visuospatial working memory is heavily right-lateralized, also as might be expected from the neuropsychology of brain injury (Banich, 2004, Chapters 7 and 10). A task requiring short-term memory for the spatial locations of a pattern of dots activated a small region of tissue in right prefrontal cortex and a larger region of tissue in right parietal cortex. One might speculate from this comparison that for verbal materials the “thinking” part (attributed to frontal cortex) is more demanding than the “storage of data” part (attributed to parietal cortex), whereas the reverse might be true for spatial materials. Storage of spatial data appears to take up a lot of brain space compared to storage of verbal data. This is only a speculation about human beings, but it does hold true for computers and smartphones: visuospatial materials take up a lot of storage room compared to text or speech.

The importance of working memory in language has been a matter of debate.
Most theories of language and reading comprehension give working memory a seat at the table, though what it is supposed to do varies from theory to theory (Caplan & Waters, 1995; Daneman & Merikle, 1996; Snowling, Hume, Kintsch & Rawson, 2008; Perfetti, 1985). Much the same holds for language production (Eberhard, Cutting, & Bock, 2005; Bock & Cutting, 1992). Gruber and Goschke (2004) argue in favor of a domain-specific, heavily left-lateralized portion of the working memory system devoted specifically to language. Baddeley (2000) reviews a variety of evidence regarding the role of working memory in language learning and in acquiring a second language, and how working memory might be compromised in language disorders such as specific language impairment. What is currently agreed about the functional anatomy of the phonological loop, the specifically verbal storage and processing component of the working memory system, is shown in Figure 4-7.

Pursuing the question of whether the phonological loop is involved in any aspect of speech production, Acheson, Hamidi, Binder, and Postle (2011) established a direct link between verbal working memory and the phonological/articulatory demands of one act of speech production: reading aloud. Using transcranial magnetic stimulation (TMS) to temporarily disrupt neural signals in highly localized subregions of the cortex, they attempted to impair performance in three different tasks. One of these tasks, paced reading, in which participants had to read lists of phonologically similar pseudowords aloud, was chosen because it ought to draw on the specifically phonological and articulatory resources of working memory. Acheson and colleagues predicted from previous work on the functional anatomy of working memory that TMS administered to the posterior superior temporal gyrus (a region involved in a variety of reading functions and implicated in verbal working memory and the phonological loop) ought to harm paced reading.
As can be seen in Figure 4-8, this is exactly what they observed.

Pillsbury allowed that both might be at work, and this is the position taken by most current theories of attention. Sometimes inhibitory processes need not be engaged, but when conflict and confusion arise, or when the interpretation of the situation changes so that “old news” needs to be discarded in favor of new goals or new information, then inhibition comes into play. The engagement of inhibitory processes is thought to be triggered by conflict-monitoring operations that run in the background of all task performance (see Botvinick, Cohen, & Carter, 2004). Detection of conflict or ambiguity alerts the executive and supervisory control system, which slows performance so that more care can be taken, updates the goals to be pursued, deletes now-irrelevant information so as to reduce “cognitive clutter” (Hasher, Zacks, & May, 1999), and allows the system to resolve the conflict or deal with the ambiguity.

A compelling and much-studied example of dealing with conflict comes from the Stroop Color-Naming Task (Cohen, Aston-Jones, & Gilzenrat, 2004; MacLeod, 1991; Stroop, 1935). This task presents words printed in different colors. One might see the word “blue” printed in blue, the word “red” printed in blue, the word “chair” printed in red or in blue, or simply a patch of color. In all cases, the job is to name the color of the stimulus, not to read it if it is a word. Relative to naming the color of a color patch, which is the simplest color-naming task imaginable, any stimulus that includes a word slows performance, at least for skilled readers. It is as if just having a word available to perception creates dual-task interference, in which it is difficult to ignore the word or to refrain from performing its automatically associated task of reading (Brown, Gore, & Carr, 2002).
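The stimulus conditions just described can be coded mechanically, and the slowing produced by each kind of word can then be expressed relative to the color-patch baseline. The sketch below is illustrative only: the color vocabulary, the trial list, and the reaction times are invented, and the function names `stroop_condition` and `slowing_vs_patch` are hypothetical.

```python
from statistics import mean

# Hypothetical set of color words; any other word counts as a neutral word.
COLOR_WORDS = {"red", "blue", "green"}


def stroop_condition(word, ink):
    """Classify a color-naming trial; word is None when the stimulus is a plain color patch."""
    if word is None:
        return "patch"
    if word in COLOR_WORDS:
        return "congruent" if word == ink else "incongruent"
    return "neutral_word"


# Invented trials: (word or None, ink color, color-naming RT in ms).
trials = [
    (None, "blue", 520), (None, "red", 540),
    ("chair", "red", 570), ("chair", "blue", 590),
    ("blue", "blue", 560), ("red", "red", 580),
    ("red", "blue", 680), ("blue", "red", 700),
]


def slowing_vs_patch(trials):
    """Mean RT for each word condition minus mean RT for color patches (ms)."""
    rts_by_condition = {}
    for word, ink, rt in trials:
        rts_by_condition.setdefault(stroop_condition(word, ink), []).append(rt)
    baseline = mean(rts_by_condition.pop("patch"))
    return {cond: mean(rts) - baseline for cond, rts in rts_by_condition.items()}


print(slowing_vs_patch(trials))
```

In these invented numbers every condition that contains a word is slower than the color patch, mirroring the pattern described above, and the incongruent condition shows by far the largest slowing.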
Work on communication patterns among different brain regions (called “effective connectivity” or “functional connectivity”) shows that the anterior cingulate cortex (ACC) participates in a network with other prefrontal regions and portions of parietal cortex to process conflict and also to deal with surprising stimuli, as when an unexpected or rare stimulus occurs that requires a response (Wang, Liu, Guise, et al., 2009). It appears that surprising stimuli are recognized in parietal cortex, especially the intraparietal sulcus, which signals ACC and dorsolateral prefrontal cortex (DLPFC) that something unexpected requires scrutiny and possibly action. Recognizing and adjusting for conflict works in the opposite direction: ACC and DLPFC serve as sentinels and interact to determine what processing adjustments need to be made in executive and supervisory control. This prefrontal collaboration includes signaling parietal cortex about changes to be made in selective attention. Thus, parietal cortex initiates network activity in the processing of surprising stimuli, whereas ACC and DLPFC interact with each other to resolve conflict by changing the deployment of attention during task performance.

As might be expected from the earlier discussion of partial modality-specificity and partial modality-overlap between visual and auditory attention, monitoring and adjusting for conflict also depends to some degree on the sensory modality in which stimuli are being processed. Roberts and Hall (2008) compared a standard visual Stroop color-naming task, as described above, to an auditory Stroop-like task with similar demands for managing conflict. The auditory Stroop task presented participants with the words “high,” “low,” or “day” (the neutral equivalent of a patch of color), spoken in a high-pitched or a low-pitched voice. The participant’s job was to indicate the pitch of the voice.
Thus, the congruent condition was a high-pitched voice saying “high” or a low-pitched voice saying “low,” whereas the incongruent condition, which elicits conflict, was a high-pitched voice saying “low” or a low-pitched voice saying “high.” Imaging data collected with fMRI during task performance revealed regions activated in both tasks, corresponding to the basic conflict-monitoring and adjustment processes already described, plus modality-specific regions that appeared only in the visual Stroop task or only in the auditory Stroop task.
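
The auditory task's design, three words crossed with two voice pitches, can likewise be enumerated in code. This is a hedged sketch under stated assumptions: the trial format (word, pitch, reaction time in ms) and the function names `auditory_condition` and `conflict_effect` are hypothetical, and the conflict index shown (mean incongruent RT minus mean congruent RT) is one common way of quantifying Stroop interference, not necessarily the contrast Roberts and Hall (2008) analyzed.

```python
from itertools import product
from statistics import mean

WORDS = ("high", "low", "day")  # "day" is the neutral word, like a color patch
PITCHES = ("high", "low")       # voice pitch: the dimension the participant reports


def auditory_condition(word, pitch):
    """Classify one auditory Stroop trial by word-pitch agreement."""
    if word not in WORDS or pitch not in PITCHES:
        raise ValueError(f"unknown stimulus: {word!r} in a {pitch!r} voice")
    if word == "day":
        return "neutral"
    return "congruent" if word == pitch else "incongruent"


def conflict_effect(trials):
    """Mean incongruent RT minus mean congruent RT, in ms.

    trials: iterable of (word, pitch, rt_ms) tuples (hypothetical format).
    Neutral trials are classified but not used in this particular index.
    """
    rts = {"congruent": [], "incongruent": [], "neutral": []}
    for word, pitch, rt_ms in trials:
        rts[auditory_condition(word, pitch)].append(rt_ms)
    return mean(rts["incongruent"]) - mean(rts["congruent"])


if __name__ == "__main__":
    # Print the full 3 x 2 design: each word spoken in each pitch.
    for word, pitch in product(WORDS, PITCHES):
        print(f"{word!r} in a {pitch}-pitched voice: {auditory_condition(word, pitch)}")
```

Keeping the neutral word in the design mirrors the color patch in the visual task, so a baseline comparison like the visual one could be computed from the same trial list.
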

Attention: architecture and process

The attention systems of the human brain

Attention is separate

Attentional functions

The attentional network test

The three manipulations

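| Measure | Effect and SD (ms) | Alerting | Orienting | Conflict |
| --- | --- | --- | --- | --- |
| Alerting | 60 (34) | | | |
| Orienting | 31 (34) | .258 | | |
| Conflict | 102 (57) | .258 | .155 | |
| Mean reaction time | 768 (118) | .556 | −.180 | .385 |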

System 1—arousal, alerting, and vigilance: preparing for task performance

Neurotransmitter-defined pathways of arousal, alerting, and vigilance

Circadian rhythm

Alerting

Vigilance

Yerkes-Dodson law

System 2—selective attention: orienting and selecting information

The costs and benefits of selective attention

The functional architecture and functional anatomy of selective attention

Auditory selective attention

Cross-modal attention and speech

How does selection work? Biasing activation among representations

Signals from the speaker call the listener’s attention to language

The development of shared attention via looking and pointing

Eye movements give a real-time view of language comprehension

The impact of descriptions measured with memory

System 3—executive and supervisory control: managing goals and tasks

The functional architecture and functional anatomy of working memory

Working memory and language

Conflict monitoring and inhibitory regulation
