CHAPTER 5
Ever since the early days of psychology, when the discipline was more akin to philosophy than science, thinkers who concerned themselves with the phenomenon of memory found cause to make distinctions based on the type of information held in that memory. For example, the French philosopher Maine de Biran proposed three distinct memories, which he referred to as mechanical, sensitive, and representative, each depending on different mechanisms and characterized by different properties (Maine de Biran, 1804/1929). According to Biran, mechanical memory involves the acquisition of motor and verbal habits and operates unconsciously; sensitive memory involves feelings and affect and also operates unconsciously; and representative memory involves the conscious recollection of ideas and events. A second early distinction was made by William James in his seminal text Principles of Psychology (1890), where he focused on temporal properties of particular memories, contrasting elementary memory (also called primary memory) and secondary memory. He wrote:
The multiple memory systems approach focuses on identifying functionally and anatomically distinct systems, which differ in their “methods of acquisition, representation, and expression of knowledge” (Tulving, 1985, p. 3). There are a number of different versions of this approach. For example, Squire (2004; Squire & Zola-Morgan, 1988) suggested that the most fundamental distinction is between declarative and nondeclarative memories. Declarative memory is what is usually meant by the term memory in ordinary language; it is the kind of memory impaired in amnesia and concerns the conscious recollection of facts and events. For this reason it has also been termed explicit memory. It provides a representational vocabulary for modeling the external world, and the resulting models can be evaluated as either true or false with respect to the world. It is typically assessed by tests of recall, recognition, or cued recall.
In contrast, nondeclarative memory is a catch-all term referring to a variety of other memories, including most notably procedural memory. Nondeclarative (or implicit) memories have in common that they are expressed through action rather than recollection. As such, they are not true or false, but rather reflect qualities of the learning experience. Strong evidence in support of this distinction comes from studies of amnesic patients from as early as Milner (1962), who demonstrated that patient H. M. could learn a mirror drawing task (invoking procedural memory), but displayed no memory of actually having practiced the task before (a declarative memory). Additional demonstrations have shown normal rates of learning in a variety of skills without conscious awareness that the learning has taken place (cf. Squire, 1992, for a review). Studies of brain-damaged patients and animal models point to medial temporal lobe structures, including the hippocampal region and the adjacent entorhinal, perirhinal, and parahippocampal cortices, as crucial for establishing new declarative memories (Buckner & Wheeler, 2001; Squire, Stark, & Clark, 2004). These structures are significant because they receive multi-modal sensory input via reciprocal pathways from frontal, temporal, and parietal areas, enabling them to consolidate inputs from these regions (Alvarez & Squire, 1994; McClelland, McNaughton, & O’Reilly, 1995). A hallmark of damage to the medial temporal lobe is profound forgetfulness for any event occurring longer than 2 seconds in the past (Buffalo, Reber, & Squire, 1998), regardless of sensory modality (e.g., Levy, Manns, Hopkins, et al., 2003; Milner, 1972; Squire, Schmolck, & Stark, 2001).
In addition, impairment in recollection of declarative memories can occur despite intact perceptual abilities and normal performance on intelligence tests (Schmolck, Kensinger, Corkin, & Squire, 2002; Schmolck, Stefanacci, & Squire, 2000), lending support to the idea that declarative memory may constitute a separable memory system. Over time, however, memories become largely independent of the medial structures, and more dependent on neocortical structures, especially in the temporal lobes. In contrast, there is no specific brain system related to establishing nondeclarative memories, as the category includes a variety of different types of memories. For example, creation of memories via classical conditioning depends on the cerebellum and amygdala (e.g., Delgado, Jou, LeDoux, & Phelps, 2009; Thompson & Kim, 1996), while procedural learning depends on the basal ganglia, especially the striatum (e.g., Packard, Hirsh, & White, 1989; Poldrack, Clark, Pare-Blagoev, et al., 2001; Salmon & Butters, 1995; Ullman, 2004). Figure 5-1 provides a summary of the subtypes of memory falling under each of these two distinctions, together with the primary brain structures that have been shown to support these memories in humans and experimental animals. A second frequently cited taxonomy of memory systems is that developed by Tulving and colleagues (Schacter & Tulving, 1994; Tulving, 1983). This approach is particularly concerned with establishing a distinction between two subtypes of declarative memory: semantic and episodic memory, distinguished by the relation of a particular piece of knowledge to a particular individual. For example, the knowledge that pizza is made with cheese and tomato sauce would reside in semantic memory, and would be shared by everyone, while the knowledge that Andrew had two slices of mushroom pizza and a soda for lunch would reside in episodic memory and be held only by Andrew and those who ate with him.
In addition to these two separate memory systems, these researchers have argued for the functional and neurological distinctness of three others: the perceptual representation system (PRS), procedural memory, and working memory (WM). The first two of these would be considered nondeclarative memories under the taxonomy suggested by Squire and colleagues, and WM would be considered a separate memory system altogether according to this approach. We discuss the behavioral and neurological properties of each of these five systems in turn.
Semantic memory is generally assessed through object naming (“This is a picture of a ____”) and queries that require access to world knowledge (e.g., the Pyramids and Palm Trees test [Howard & Patterson, 1992], in which individuals must decide what type of tree is most associated with an Egyptian pyramid). Category generation tasks, in which patients are asked to name as many exemplars of a provided category as possible in 1 minute, have also been used to evaluate fluency and speed of accessing categories of information. In addition to storing facts about the world, semantic memory is the repository for linguistic knowledge about words, including phonological (e.g., that the word night rhymes with kite), morphological (e.g., that taught is the past tense of teach), grammatical (e.g., that hit takes a direct object), and semantic properties (e.g., that sleep and snooze are synonyms). This knowledge has been referred to as the mental lexicon (Ullman, 2004; see also Chapter 6).
Cognitive psychology has long been interested in the organization of the mental lexicon, especially its semantic aspects, and the means through which it supports comprehension and communication (cf. Murphy, 2002, for a review). That knowledge is organized has been demonstrated experimentally in numerous studies observing correlations between reaction times to verify relationships between concepts and their degree of relation.
For example, the early study of Collins and Quillian (1969) found that participants took less time to verify the statement “A canary is a bird” compared to “A canary is an animal.” They interpreted this result as evidence for a hierarchical representation of concepts, such as that presented in Figure 5-2, where relationships between categories are represented by solid lines and properties of individual objects are represented by dashed lines. Since the concept canary is closer to bird than to animal, they reasoned that the faster reaction time was possible because there were fewer links to traverse in order to verify the statement. Later research revealed that the situation is not so simple: statements about items that are more typical of a category, such as “A robin is a bird,” were judged more quickly than statements about atypical exemplars, such as “An ostrich is a bird,” despite the fact that both are located at the same level of the conceptual hierarchy (Rips, Shoben, & Smith, 1973; Rosch & Mervis, 1975). With this result, it became clear that knowledge organization reflects not just static or logical relationships between concepts, but also an individual’s experience with the world. There is now a substantial amount of evidence from neuroimaging techniques (e.g., PET, fMRI) that experience with the world determines how the mental lexicon is stored in the brain. For example, reading action words that are semantically related to different body parts (e.g., “kick,” “pick,” “lick”) activates regions of the motor and premotor cortex responsible for controlling those body parts (Aziz-Zadeh, Wilson, Rizzolatti, & Iacoboni, 2006; Hauk, Johnsrude, & Pulvermuller, 2004; Pulvermuller, 2005; Tettamanti, Buccino, Saccuman, et al., 2005). Similarly, reading or naming words associated with tool actions (e.g., hammer) activates a network of sensorimotor regions also engaged when perceiving and using tools (Chao, Haxby, & Martin, 1999).
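Returning to the hierarchical account of Collins and Quillian (1969) described above, the link-counting logic can be illustrated with a small sketch. The toy network and the function name `links_to_verify` are assumptions made purely for illustration and are not taken from their study. The sketch shows only that a strictly hierarchical store predicts verification effort from the number of links traversed, which is why it cannot, on its own, produce the typicality differences reported by Rips, Shoben, and Smith (1973).

```python
# Toy "is-a" hierarchy in the spirit of Collins and Quillian (1969).
# The nodes below are illustrative assumptions, not their materials.
IS_A = {
    "canary": "bird",
    "robin": "bird",
    "ostrich": "bird",
    "bird": "animal",
    "fish": "animal",
}


def links_to_verify(concept, category):
    """Count the is-a links traversed from concept up to category.

    Returns None when category is not an ancestor of concept.
    """
    steps = 0
    node = concept
    while node != category:
        if node not in IS_A:
            return None  # reached the top without finding the category
        node = IS_A[node]
        steps += 1
    return steps


print(links_to_verify("canary", "bird"))    # 1
print(links_to_verify("canary", "animal"))  # 2
# Typical and atypical exemplars sit at the same depth in this scheme:
print(links_to_verify("robin", "bird") == links_to_verify("ostrich", "bird"))  # True
```

Because robin and ostrich are the same number of links from bird, a pure link-counting account predicts identical verification times for them, contrary to the typicality results.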
In addition to the influence of embodiment, a variety of other properties of objects in the world appear to have dedicated temporal lobe regions in which they are processed, and accessing words associated with these properties activates adjacent brain regions. For example, color and motion perception are associated with separate regions in the left ventral and medial temporal lobe, respectively (Corbetta, Miezin, Dobmeyer, et al., 1990; Zeki, Watson, Lueck, et al., 1991). In a study in which participants were shown achromatic pictures of objects (e.g., line drawing of a pencil), and asked to generate color words (e.g., “yellow”) and action words (e.g., “write”) related to these objects, Martin, Haxby, Lalonde, et al. (1995) found that regions just anterior to the ventral and medial lobe regions just mentioned were active. Similar findings have been observed for size and sounds of objects (Kellenbach, Brett, & Patterson, 2001), as well as grammar related properties such as the animate/inanimate distinction (Chao, Haxby, & Martin, 1999). Taken together, these results suggest that knowledge in the mental lexicon is represented by a distributed network of features processed primarily in the temporal lobes, with different object categories eliciting different patterns of activation among relevant features (Martin & Chao, 2001; McClelland & Rogers, 2003). In addition to these temporal regions, neuroimaging suggests that the retrieval and selection of information in the mental lexicon is managed by the left ventrolateral prefrontal cortex, corresponding to the inferior frontal gyrus (including Broca’s area) and Brodmann’s areas 44, 45, and 47 (cf. Bookheimer, 2002; Thompson-Schill, 2003, for reviews). These areas will also become relevant later in the discussion of the interaction of memory and syntactic processing. 
The existence of a separate episodic memory system appears to receive strong motivation from data from amnesic patients, who have specific deficits in episodic memory with very few, if any, deficits in the other memory systems. Such pathology suggests that episodic memories should be dissociable from other types of memories, and may occupy a neurologically distinct region in the brain (Tulving, 2002, p. 12). Assessment of episodic memory proves difficult, however, since the personal nature of these memories limits the ability of experimenters to manipulate them and evaluate the correctness of responses. Consequently, many studies investigating episodic memory utilize list-learning paradigms, which give experimenters complete control over properties of the to-be-remembered stimuli. Participants are presented with a list of words (or visual items such as faces or patterns) and asked to report on various incidental properties of them during an encoding phase (e.g., whether presented in upper or lower case letters, in particular colors, with a particular other word, or even whether it occurred at all). The subsequent retrieval phase then asks them to make judgments about whether items have been seen before (recognition) or to produce the item or its associates (recall), and sometimes to specify whether they consciously remember learning the word during the study phase or not (remember/know judgment). These paradigms enable experimenters to directly examine the conditions that lead to the successful creation of memories. For example, a group of studies have investigated “subsequent memory effects” in which sets of items that have been identified via post-hoc memory tests as having been successfully remembered are contrasted with those that have not been remembered (e.g., Rugg, Otten, & Henson, 2002; Wagner, Koutstaal, & Schacter, 1999). 
The goal was to uncover brain regions specifically involved in task-invariant episodic encoding; however, such a region has so far resisted identification. Instead, the main result from these studies is that the pattern of brain activation associated with a particular memory differs depending on the type of processing engaged during study (e.g., Kelley, Miezin, McDermott, et al., 1998; McDermott, Buckner, Petersen, et al., 1999; Otten & Rugg, 2001; Wagner, Poldrack, Eldridge, et al., 1998). Thus, words encoded via a semantic task (e.g., judging whether a word is animate) activate areas of the medial prefrontal cortex and the dorsal part of the left inferior frontal gyrus, which has been linked to semantic WM (e.g., Buckner & Koutstaal, 1998; Gabrieli, Poldrack, & Desmond, 1998; Wagner et al., 1998). Words encoded via a syllable counting task, on the other hand, fail to activate any prefrontal areas, and instead show activations in bilateral parietal and fusiform regions and in the left occipital cortex, areas that have been implicated in phonological processing tasks (e.g., Mummery, Patterson, Hodges, & Price, 1998; Poldrack, Wagner, Prull, et al., 1999; Price, Moore, Humphreys, & Wise, 1997). Such task-specific activations are consonant with the idea that a memory for a particular stimulus includes a variety of incidental information about the context in which it was remembered, even including subjective factors such as mood or cognitive state. Thus, episodic memories, like semantic memories, are represented in the brain as distributed networks of activation, pointing to the need for a more refined explanation of episodic amnesia than simply to look for the region that houses them. One approach is the idea that damage must be specific to the mechanism through which these ideas are reactivated (wherever they may be stored), and not the means through which they are stored.
Indeed, Tulving and Pearlstone (1966) pointed out that much of what we commonly view as memory loss—a memory no longer being available—is in fact more properly viewed as a failure in accessibility. Subsequently, Tulving (1979) formulated the encoding specificity principle, which states “[t]he probability of successful retrieval of the target item is a monotonically increasing function of information overlap between the information present at retrieval and the information stored in memory” (p. 408). Indeed, a recent survey of neuroimaging research concludes that the same brain areas are active both at encoding and retrieval (Danker & Anderson, 2010). One demonstration of this idea is the classic study by Thomson and Tulving (1970), who observed the expected result when no associate for the target word flower was present during the study phase: a strong associate presented at test (bloom) elicited recall of flower better than no associate or than a weak associate (fruit) presented at test. But when the weak associate is presented during the study phase, the presence of this same weak associate at test produces markedly better recall than when the strong associate is presented (73% versus 33% correct recalls). Thus, the effectiveness of even a longstanding cue, drawn from semantic memory, depends crucially on the processes that occurred when particular episodic memories are created. While the foregoing discussion has centered around studies of memory per se, evidence for the role of encoding context and its interaction with the information available at retrieval has also been observed in studies of language comprehension. In order to isolate the importance of encoding versus retrieval operations, Van Dyke and McElree (2006) manipulated the cues available at retrieval during sentence processing while keeping the encoding context constant. 
We tested grammatical constructions in which a direct object has been displaced from its verb by moving it to the front of the sentence (e.g., It was the boat that the guy who lived by the sea sailed in two sunny days). Here, when the verb sailed is processed, a retrieval must occur in order to restore the noun phrase the boat into active memory so that it can be integrated with the verb. We manipulated the encoding context by asking participants to remember a three-word memory list prior to reading the sentence (e.g., TABLE-SINK-TRUCK); this memory list was present for some trials (Load Condition) and not for others (No Load Condition). The manipulation of retrieval cues was accomplished by substituting the verb fixed for sailed, creating a situation where four nouns stored in memory (i.e., table, sink, truck, boat) are suitable direct objects for the verb fixed (Matched Condition), while only one is suitable for the verb sailed (Unmatched Condition). Reading times on the manipulated verb are shown in Figure 5-3: when there was no load present, there was no difference in reading times; however, the presence of the memory words led to increased reading times when the verb was Matched as compared to when it was Unmatched. Thus, as predicted by encoding specificity, the overlap between retrieval cues generated from the verb (e.g., cues that specify “find a direct object that is fixable/sailable”) and contextual information was a strong determinant of reading performance. We note, however, that an important difference between this study and the Thomson and Tulving (1970) study is that here, the match between the cues available at retrieval and the encoding context produced a detrimental effect. This is because the similarity between the context words and the target word (i.e., table, sink, truck, and boat are all fixable) created interference at retrieval.
We will discuss the role of interference in memory and language further in the section on forgetting. The important point here, however, is that encoding context has its effect in conjunction with the retrieval cues used to reaccess the encoded material. An important unresolved question pertains to the relationship between episodic memories and semantic memories. From the perspective of the multiple memories approach, these two types of memories are considered to be separate systems; however, the criteria by which a system is determined have been criticized as indecisive (e.g., Surprenant & Neath, 2009). Much of the support for separate systems comes from functional and neurological dissociations, such that tasks that are diagnostic of System A, or brain regions implicated in the healthy functioning of System A, are different from those tapping into the function of System B. While dissociations are common, a number of researchers have published papers questioning their logic as a means for identifying separate brain systems (e.g., Ryan & Cohen, 2003; Van Orden, Pennington, & Stone, 2001). For example, Parkin (2001) notes that apparent dissociations observed in amnesic patients, who show unimpaired performance on standardized tests of semantic memory, but intense difficulty recalling episodic events such as a recently presented word list or lunch menu, are confounded by test difficulty. In the face of the temporally graded nature of amnesia, in which more recently acquired memories are the most susceptible to loss, the key problem with these assessments of semantic memory is that they test information that was acquired by early adult life. When semantic memory tests are carefully controlled so as to test more recently acquired semantic memories, the relative sparing of one system over the other is less apparent.
While the debate on whether episodic and semantic memories are distinct systems will likely continue, from the point of view of language, it is at least helpful to distinguish autobiographical episodic memories, which are not fundamentally related to language processing, from other contextually anchored memories (e.g., Conway, 2001). The relationship between the latter type of episodic memories and semantic memory seems intrinsic: no scientist has ever claimed that individuals are born knowing the conceptual knowledge that comprises the meaning of words in the mental lexicon.¹ These meanings must be learned through experience with the world and with language. There is now a considerable body of evidence suggesting that the meaning (and grammatical usage) of individual words is learned (even by infants) through repeated learning episodes (e.g., Harm & Seidenberg, 2004; Mirković, MacDonald, & Seidenberg, 2005; Sahni, Seidenberg, & Saffran, 2010). Fewer learning episodes appear to produce low-quality lexical representations, characterized by variable and inconsistent phonological forms, shallower meaning representations, incomplete specification of grammatical function, and (for reading) underspecified orthographic representations (Perfetti, 2007). The process of consolidating individual learning events into efficiently accessed long-term memory (LTM) representations has been attested in the domain of reading by neuroimaging studies showing that repeated exposures to a word result in reduced activation in reading-related brain regions, especially areas of the ventral occipital-temporal region thought to contain visual word forms (cf. McCandliss, Cohen, & Dehaene, 2003, for a review), and in the inferior frontal gyrus (e.g., Katz, Lee, Tabor, et al., 2005; Pugh, Frost, Sandak, et al., 2008).
This reduction is consistent with studies of perceptual and motor skill learning in which initial (unskilled) performance is associated with increased activation in task-specific cortical areas, to be followed by task-specific decreases in activation in the same cortical regions after continued practice (e.g., Poldrack & Gabrieli, 2001; Ungerleider, Doyon, & Karni, 2002; Wang, Sereno, Jongman, & Hirsch, 2003). Although episodic and semantic memories, both of which are declarative memories, are generally characterized as explicit memories, in that they are accessible to conscious reporting, the process of learning that binds the two is not conscious. The ability to learn via repeated exposures engages the brain’s ability to extract statistical regularities across examples, which occurs gradually over time without conscious awareness (Perruchet & Pacton, 2006; Reber, 1989; Reber, Stark, & Squire, 1998; Squire & Zola, 1996). A number of recent studies have shown that infants as young as 8 months old are sensitive to the statistical regularities that exist in natural languages and can use them, for example, to identify word boundaries in continuous speech (Saffran, Aslin, & Newport, 1996; Sahni, Seidenberg, & Saffran, 2010) and to learn grammatical and conceptual categories (Bhatt, Wilk, Hill, & Rovee-Collier, 2004; Gerken, Wilson, & Lewis, 2005; Shi, Werker, & Morgan, 1999). Computational models that implement this learning process over a distributed representation of neuronlike nodes (i.e., connectionist models) have demonstrated that the resulting networks produce humanlike performance in language acquisition and language comprehension (e.g., Seidenberg & MacDonald, 1999), including the same types of performance errors common to children learning language and adults processing ambiguous sentences. In contrast to the earlier discussion, the traditional taxonomy depicted in Figure 5-1 suggests a clear separation between explicit and implicit memories.
Historically, this reflected the need to account for certain cases of amnesia (e.g., patient H.M., Scoville & Milner, 1957) in which patients displayed increasing improvement on complex cognitive skills (e.g., game playing) with no ability to recall ever having learned to play the game or even playing it previously. The explanation afforded was that while damage to the medial temporal lobe structures destroyed the ability to access declarative memory, these patients’ nondeclarative memory (especially procedural memory), which does not depend on these brain regions, was intact. This memory is characterized as implicit because patients are unaware of the learning that has taken place. Schacter and Tulving (1994) proposed two separate memory systems to account for these results: the procedural memory system (discussed later) and the PRS, comprised of a collection of domain-specific modules, which was responsible for priming results (Schacter, Wagner, & Buckner, 2000). The visual word form area, noted earlier, has been offered as one of the modules comprising the PRS (Schacter, 1992), based mainly on evidence from amnesics who show normal priming effects for the surface form of novel words, which consequently could not be stored in semantic memory (e.g., Cermak, Verfaellie, Milberg, et al., 1991; Gabrieli & Keane, 1988; Haist, Musen, & Squire, 1991; Bowers & Schacter, 1992). In addition, it has been observed that some amnesic patients can read irregularly spelled or unknown words, despite having no apparent contact with their meaning (e.g., Funnel, 1983; Schwartz, Saffran, & Marin, 1980). This has been interpreted as support for a separate word form representation independent of meaning. Of the implicit memory systems, procedural memory (memory for how to do something) has received the most attention, both in the memory and in the language domain.
It has been claimed to support the learning of new, and the control of established, sensorimotor and cognitive habits and skills, including riding a bicycle and skilled game playing. As with all implicit memory systems, learning is gradual and unavailable to conscious description; however, in the procedural system the outcome of learning is thought to be rules, which are rigid, inflexible, and not influenced by other mental systems (Mishkin, Malamut, & Bachevalier, 1984; Squire & Zola, 1996). Neurologically, the system is rooted in the frontal lobe and basal ganglia, with contributions from portions of the parietal cortex, superior temporal cortex, and the cerebellum. The frontal lobe, especially Broca’s area and its right homologue, is important for motor sequence learning (Conway & Christiansen, 2001; Doyon, Owen, Petrides, et al., 1996) and especially learning sequences with abstract and hierarchical structures (Dominey, Hoen, Blanc, & Lelekov-Boissard, 2003; Goschke, Friederici, Kotz, & van Kampen, 2001). The basal ganglia have been associated with probabilistic rule learning (Knowlton, Mangels, & Squire, 1996; Poldrack, Prabhakaran, Seger, & Gabrieli, 1999), stimulus-response learning (Packard & Knowlton, 2002), sequence learning (Aldridge & Berridge, 1998; Boecker, Dagher, Ceballos-Baumann, et al., 1998; Doyon, Gaudreau, Laforce, et al., 1997; Graybiel, 1995; Peigneux, Maquet, Meulemans, et al., 2000; Willingham, 1998), and real-time motor planning and control (Wise, Murray, & Gerfen, 1996). From the perspective of language, one prominent proposal (Ullman, 2004) suggests that the procedural memory system should be understood as the memory system that subserves grammar acquisition and use.
Implicit in this proposal is an understanding of grammar as fundamentally rule-based, an idea with a long (and controversial) history in linguistic theory (e.g., Chomsky, 1965, 1980; Marcus, 2001; Marcus, Brinkmann, Clahsen, et al., 1995; Marcus, Vijayan, Bandi, et al., 1999; Pinker, 1991). A frequently cited example is the rule that describes the past tense in English, namely, verb stem + ed. This rule allows for the inflection of novel words (e.g., texted) and accounts for the phenomenon of overgeneralizations in toddlers (e.g., Daddy goed to work). According to this view, the language-related functions of the neurological structures that support procedural memory are expected to be similar to their nonlanguage function. Thus, the basal ganglia and Broca’s area (especially BA 44) are hypothesized to govern control of hierarchically structured elements in complex linguistic representations and assist in the learning of rules over those representations. This approach is incompatible with the connectionist approach, discussed earlier, in which regularities in language are represented in distributed networks extracted through the process of statistical learning. In these models there are no rules, and indeed, one connectionist implementation specifically demonstrated that such a model could capture the rule-based behavior of past-tense assignment in a system without any rules (Rumelhart & McClelland, 1986). A number of heated exchanges between scientists on both sides of this debate have been published (e.g., Seidenberg, MacDonald, & Saffran [2002] versus Peña, Bonatti, Nespor, & Mehler [2002]; Seidenberg & Elman [1999] versus Marcus et al. [1999]; Keidel, Kluender, Jenison, & Seidenberg [2007] versus Bonatti, Peña, Nespor, & Mehler [2005]), with each side pointing to significant empirical results in support of its position.
What is important for our current purpose is the conclusion that there need not be a separable procedural memory system to support grammar processing, as viable non–rule-bound systems have demonstrated that statistical learning over examples held in declarative memory can produce a network with the necessary knowledge held in a distributed representation. Even Ullman (2004) seems to acknowledge the difficulty of distinguishing between the separate declarative and procedural systems he proposes, as he states that the same or similar types of knowledge can in some cases be acquired by both systems. What appears to be more critical, as revealed by the statistical learning approach, and especially studies of language acquisition (e.g., Saffran et al., 1996), is the ability to identify and make use of cues in order to learn about the regularities in language. Indeed, a central claim of the connectionist approach is that the cues that facilitate language acquisition in infants become the constraints that govern language comprehension in adults (Seidenberg & MacDonald, 1999). As we discuss the memory mechanisms that support comprehension in later sections, cues will again arise as an important determinant of successful language use. The construct of WM as a separate store for temporarily held information is an outgrowth of the two-store memory taxonomy, which has been termed the Modal Model (Murdock, 1974) after the statistical term mode, because its influence became so pervasive during the last half of the twentieth century. Indeed, even in 2010 it figures prominently in many cognitive and introductory psychology textbooks. This model featured a short-term memory (STM) store characterized by a limited capacity in which verbal information could be held for very short durations, but only if constantly rehearsed via active articulation.
This is in contrast to the LTM store, which corresponds roughly to the semantic, episodic, and procedural memory systems discussed earlier and is assumed to have an unlimited capacity and duration, so long as appropriate retrieval cues are present to restore passive memories into conscious awareness. The most frequently cited presentation of this model is that of Atkinson and Shiffrin (1968), illustrated in Figure 5-4, which also included a third store for sensory information, subdivided into separate registers for visual, auditory, and haptic information. The modal model emphasized not only the qualitative differences between different memory types but also the processing mechanisms of each and the way they interact. Inspired by the nascent computer metaphor of the 1950s, this model embodied a specific algorithm through which fleeting sensory information was transformed into a lasting memory. In particular, research demonstrating the highly limited duration of sensory information (1–3 seconds; Sperling, 1960) suggested that it was necessary for information to be verbally recoded, and also rehearsed, in order to be maintained, and this occurred in the short-term store. Once information had received a sufficient amount of rehearsal in STM, it would move into LTM, where it would reside in a passive state until retrieved back into STM, where it would be restored into consciousness. Thus, STM is the gateway to and from LTM: any information entering LTM must go through STM, and whenever information is retrieved it must again enter STM. (It should be noted that original information is not really transferred, but rather copied from one store to another.)
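The flow of information just described can be made concrete with a minimal sketch. The class name `ModalModelSketch`, the default capacity of seven items, and the rehearsal threshold of three are all assumptions chosen for illustration; Atkinson and Shiffrin (1968) did not specify their model in this form. The sketch captures three properties from the text: STM is capacity limited, sufficient rehearsal copies (rather than moves) an item into LTM, and retrieval from LTM passes back through STM.

```python
from collections import OrderedDict


class ModalModelSketch:
    """Toy two-store memory: a capacity-limited STM and an unlimited LTM."""

    def __init__(self, stm_capacity=7, rehearsals_needed=3):
        # Both parameter values are illustrative assumptions.
        self.stm_capacity = stm_capacity
        self.rehearsals_needed = rehearsals_needed
        self.stm = OrderedDict()  # item -> rehearsal count, least recent first
        self.ltm = set()

    def attend(self, item):
        """Recode an item from the sensory register into STM."""
        if item in self.stm:
            self.stm.move_to_end(item)
            return
        if len(self.stm) >= self.stm_capacity:
            # Displace the least recently attended or rehearsed item.
            self.stm.popitem(last=False)
        self.stm[item] = 0

    def rehearse(self, item):
        """Rehearse an item held in STM; enough rehearsal copies it to LTM."""
        if item not in self.stm:
            return
        self.stm[item] += 1
        self.stm.move_to_end(item)
        if self.stm[item] >= self.rehearsals_needed:
            self.ltm.add(item)  # copied, not moved: the item stays in STM

    def retrieve(self, cue):
        """Bring an item from LTM back into STM if the cue matches it."""
        if cue in self.ltm:
            self.attend(cue)
            return cue
        return None


memory = ModalModelSketch(stm_capacity=3)
for word in ["table", "sink", "truck"]:
    memory.attend(word)
for _ in range(3):
    memory.rehearse("table")
memory.attend("boat")  # STM is full, so an unrehearsed item is displaced

print(list(memory.stm))
print(memory.retrieve("table"), memory.retrieve("sink"))
```

In this run the unrehearsed word sink is displaced before it ever reaches LTM and so cannot be retrieved, whereas the rehearsed word table survives in both stores.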
At the same time, STM represented a considerable bottleneck for cognitive activity, as it too was found to have a limited storage capacity, made memorable by George Miller’s (1956) famous report entitled “The Magical Number Seven, Plus or Minus Two.” Miller arrived at this estimate after reviewing data from a number of different paradigms in which individuals were presented with the task of learning new information, only to show highly limited recall on lists containing more than 8 items. Thus, as new information entered STM, some old information was lost through displacement, especially information that was not actively rehearsed. Further research revealed that it was possible to expand the capacity of STM via a process called chunking, in which meaningful pieces of information are grouped together into a single unit (e.g., the numbers 1, 4, 9, and 2 are remembered as the single unit 1492); however, the number of chunks that can be actively maintained remains restricted to 3–5 items (cf. Cowan, 2001, for a detailed review). The centrality of STM motivated the development of models that more precisely articulate how information is brought in and out of consciousness during the performance of cognitive tasks. It is this workspace of active information that has been termed Working Memory, the most influential version of which is the model proposed by Alan Baddeley and colleagues (e.g., Baddeley & Hitch, 1974; reviewed in Baddeley, 2003), depicted in Figure 5-5. The Working Memory model fractionated STM into a set of systems that separately characterized processing and storage; in fact, it was evidence from neuropsychological damage that emphasized the problems with a unitary STM, as patients with severe damage to STM nevertheless retained the ability to access LTM during complex cognitive tasks (Shallice & Warrington, 1970). 
The key and, ironically, least understood component of the Working Memory model is the Central Executive, which is the controlling mechanism through which information from three subsidiary “slave” storage systems (depicted as gray boxes in Figure 5-5) is brought in and out of the focus of attention (Baddeley, 2003). It is responsible for (at least) updating, shifting, and inhibiting information (Miyake et al., 2000) and has its neurological locus in the frontal lobes, especially dorsolateral prefrontal regions (BA 9/46) and inferior frontal regions (BA 6/44), with some parietal extension (BA 7/44) (e.g., Braver et al., 1997; Cohen et al., 1997). The three slave systems can be distinguished by their type of encoding, or the type of information they process. The visuospatial sketchpad is responsible for visuospatial information (e.g., images, spatial configuration, color, shape) and is fractionated into the visual cache (storage) and the inner scribe (rehearsal) components. The more recently postulated episodic buffer (Baddeley, 2000) is responsible for allowing information from LTM to interact with the other two slave systems to create multimodal chunks that are open to conscious examination. This buffer should not be confused with episodic memories, discussed earlier, as those are part of LTM, while chunks created in Baddeley’s episodic buffer are merely temporary associations between different types of information simultaneously manipulated by the central executive. A limit on the amount of information held in this buffer comes from the computational complexity of combining multiple types of codes into a single representation (Hummel, 1999). The third “slave system,” the phonological loop, is the most theoretically developed and experimentally attested. It is responsible for phonological encoding and rehearsal, the means through which verbal information is maintained in an active state. 
The psychological reality of this process was demonstrated in a number of important early experiments (e.g., Baddeley, 1966; Conrad, 1964; Wickelgren, 1965). For example, Murray (1967) developed a technique to prevent participants from utilizing inner speech to recode information, which became known as articulatory suppression. While given a list of words to remember, participants were required to say the word “the” over and over, out loud. When words in the list were similar sounding (e.g., man, mad, cap, can, map), recall errors in the memory condition without articulatory suppression reflected acoustic confusions: participants were more likely to incorrectly recall items that sounded like the target items but that were not actually in the memory list. With articulatory suppression, on the other hand, acoustic errors were no longer more likely, suggesting that the speaking task prevented participants from recoding, or rehearsing, the memory words using inner speech. These results suggest not only that information is encoded acoustically, but also that the amount of information that can be maintained is limited by the ability to actually articulate it: as the number of items to remember increases, some will be forgotten because they cannot be rehearsed. The exact capacity limit for the phonological loop has been quoted as being the amount of information that can be articulated in about 2 seconds (Baddeley, 1986; Baddeley, Thomson, & Buchanan, 1975). Neurologically, lesion studies and neuroimaging methods implicate the left temporoparietal region in the operation of the phonological loop, with BA 40 as the locus of the storage component of the loop and Broca’s area (BA 6/44) supporting rehearsal (reviewed in Vallar & Papagno, 2002, and Smith & Jonides, 1997).

The notion that WM capacity is fixed has had a huge influence on theories of language processing. 
For example, it is a well-replicated finding that sentences in which grammatical heads are separated from their dependents are more difficult to process than when heads and dependents are adjacent (e.g., Grodner & Gibson, 2005; McElree, Foraker, & Dyer, 2003). This is true of unambiguous sentences (e.g., The book ripped. versus The book that the editor admired ripped.) and of ambiguous sentences (e.g., The boy understood the man was afraid. versus The boy understood the man who was swimming near the dock was afraid.), where reanalyses prove more difficult as the distance between the ambiguity and the disambiguating material is increased (e.g., Ferreira & Henderson, 1991; Van Dyke & Lewis, 2003). A number of prominent theories have attempted to account for these results by invoking WM capacity, with the common assumption being that capacity is exhausted by the need to simultaneously “hold on to” the unattached constituent (the grammatical subjects book and man in these examples) while processing the intervening material until the main verb (ripped or was afraid) occurs. The chief question is taken to be “how much is too much” intervening material before capacity is exhausted; some have suggested that the relevant metric is the number of words (Ferreira & Henderson, 1991; Warner & Glass, 1987) or discourse referents (Gibson, 1998, 2000). Others have focused on the hierarchical nature of dependencies, suggesting that difficulty depends on the number of embeddings (Miller & Chomsky, 1963), or the number of incomplete dependencies (Abney & Johnson, 1991; Gibson, 1998; Kimball, 1973). This focus on capacity has also spawned a large body of research seeking to demonstrate that sentence comprehension suffers when capacity is reduced either experimentally through the use of dual-task procedures (e.g., Fedorenko, Gibson, & Rohde, 2006, 2007) or clinically, as when poorly performing participants also score poorly on tests of WM capacity, compared with those who do well. 
For example, King and Just (1991) found that college-level readers with “low” WM capacity showed worse comprehension and slower reading times on syntactically complex sentences than those with “high” or “middle” capacity levels. Similarly, MacDonald, Just, and Carpenter (1992) found that low-capacity individuals from the same population had more difficulty interpreting temporarily ambiguous constructions than those with larger capacities. They suggested that this was because a larger WM capacity enabled readers to maintain all possible interpretations for longer, while the smaller-capacity readers could only maintain the most likely interpretation. In cases where the ultimately correct interpretation was not the most likely one, low-capacity readers would fail to comprehend because the correct interpretation had been “pushed out” of memory. Studies of reading development also point to an association between low WM capacity and poor comprehension. In a longitudinal study of children with normal word-level (i.e., decoding) skills, Oakhill, Cain, and Bryant (2003) found that WM capacity predicted significant independent variance on standardized measures of reading comprehension at age 7–8 and again 1 year later. Further, Nation, Adams, Bowyer-Crane, and Snowling (1999) found that 10- to 11-year-old poor comprehenders had significantly smaller verbal WM capacity (though not spatial WM capacity) than normal children matched for age, decoding skill, and nonverbal abilities. Likewise, reading disabled children have been found to score in the lowest range on tests of WM capacity (e.g., Gathercole, Alloway, Willis, & Adams, 2006; Swanson & Sachse-Lee, 2001), and these scores are significant predictors of standardized measures of both reading and mathematics attainment. 
In all these studies, the standard means of measuring WM capacity is via tests referred to as complex span tasks (e.g., Turner & Engle, 1989; Daneman & Carpenter, 1980). The Reading/Listening Span version of these tasks requires participants to read or listen to an increasingly large group of sentences, and report back only the last word of each sentence in the set. The task of processing the sentence (and in some cases answering questions about it) provides a processing component that, together with the requirement to store the last words, is thought to provide an assessment of the efficiency with which the central executive can allocate resources to both maintain and process linguistic information. Indeed, the task mirrors the functional demand of processing complex linguistic constructions (e.g., long-distance dependencies) mentioned earlier, where substantial information is situated in between two linguistic constituents that must be associated. A meta-analysis of 77 studies found that the Reading Span task predicted language comprehension better than simple span tasks (e.g., digit span) in which participants simply had to remember and report back lists of words (Daneman & Merikle, 1996).

While the impact of the Working Memory model on the study of language processing is undeniable, a close examination of the model reveals that it is not well matched to the functional demands of language comprehension (Lewis, Vasishth, & Van Dyke, 2006). For example, to process the types of sentences discussed earlier (The book that the editor admired ripped.), it is argued that the noun phrase the book must be held active in WM while the subsequent information is processed, and the difficulty associated with this is what makes the sentence difficult to process. 
Yet it seems clear that, even when not processing intervening information (The book ripped.), there would simply be no time to actively rehearse previously processed constituents during real-time comprehension, where grammatical associations must be made within a few hundred milliseconds (Rayner, 1998). In addition, it seems logical that language comprehension in patients with brain damage should be significantly limited when WM spans are reduced, yet such a relation has failed to materialize, whether span is measured in terms of traditional serial recall measures (Caplan & Hildebrandt, 1988; Martin & Feher, 1990) or in terms of reading span (Caplan & Waters, 1999). Moreover, the emphasis on Reading/Listening span as an index of WM capacity further complicates the issue, as the format of the task in which participants must switch between list maintenance and language comprehension evokes conscious executive processes that are not part of normal comprehension. Consequently, it is unclear whether a participant classified as having a “Low Working Memory Span” actually has a smaller memory capacity, a slower processing speed, difficulty with attention switching, or some combination of these. Next, we discuss further problems with the capacity view itself and then return to the issue of the type of memory model that might better support language processing. Despite its wide acceptance, the empirical support for a separate, fixed-capacity temporary storage system (either STM or WM) is weak. The main evidence in support of separable systems comes from neuropsychological double dissociations, where patients who show severely impaired LTM present with apparently normal STM, and vice versa (e.g., Cave & Squire, 1992; Scoville & Milner, 1957; Shallice & Warrington, 1970). At issue is the role of the medial temporal lobes (MTL) in STM tasks. 
Recall from our previous discussion that these structures are crucial for the creation and retrieval of long-term declarative memories, so if LTM were entirely distinct from STM, then the prediction is for no MTL involvement in creating STMs or in performing STM tasks. A number of studies have recently cast doubt on whether the double dissociation actually exists, however, showing MTL involvement in short-term tasks (Hannula, Tranel, & Cohen, 2006; Nichols, Kao, Verfaellie, & Gabrieli, 2006; Ranganath & Blumenfeld, 2005; Ranganath & D’Esposito, 2005). Another source of evidence raising questions about the separability of the two types of memory is data suggesting that representations assumed to be in WM are not retrieved in a qualitatively different manner than those in LTM. Recent fMRI studies indicate that the retrieval of items argued to be within WM span recruits the same brain regions as retrieval from LTM, notably the left inferior frontal gyrus (LIFG) and regions of the medial temporal lobe (MTL) (Öztekin, Davachi, & McElree, 2010; Öztekin, McElree, Staresina, & Davachi, 2008). These imaging results align with behavioral investigations of experimental variables diagnostic of the nature of the retrieval process, such as manipulations of recency and the size of the memory set (Box 5-1). Contra long-standing claims that information in WM is retrieved with specialized operations (e.g., Sternberg, 1975), the retrieval profiles observed have consistently shown the signature pattern of a direct-access operation, the same type of retrieval operation thought to underlie LTM retrieval. In this type of operation, memory representations are “content-addressable,” enabling cues in the retrieval context to make direct contact with representations that have overlapping content, without the need to search through irrelevant representations. We take up this discussion further later.
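
The contrast between a search operation and content-addressable direct access can be illustrated with a short sketch. The Python below is an analogy only: the feature labels, the function names, and the use of a lookup table keyed by features are assumptions made for this example, not a model taken from the studies cited above. It shows that a serial scan inspects items one by one, so its cost depends on where the target sits in the list, whereas cue-based access reaches matching items without inspecting irrelevant ones.

```python
def serial_search(memory, probe_features):
    """Scan items in order; the step count grows with the target's position."""
    for steps, (item, features) in enumerate(memory, start=1):
        if probe_features <= features:  # every probe feature is present
            return item, steps
    return None, len(memory)


def build_index(memory):
    """Map each feature to the set of items that carry it."""
    index = {}
    for item, features in memory:
        for feature in features:
            index.setdefault(feature, set()).add(item)
    return index


def direct_access(index, cue_features):
    """Cues contact matching items directly via their shared content."""
    candidates = [index.get(feature, set()) for feature in cue_features]
    if not candidates:
        return set()
    return set.intersection(*candidates)


# Hypothetical feature bundles for "The book that the editor admired ripped."
memory = [
    ("book", {"noun", "inanimate"}),
    ("editor", {"noun", "animate"}),
    ("admired", {"verb"}),
]
index = build_index(memory)

# At "ripped", cues for an inanimate noun contact "book" directly.
matches = direct_access(index, {"noun", "inanimate"})

# A serial scan for the verb must pass over the two nouns first.
found, steps = serial_search(memory, {"verb"})
```

In this toy example `matches` contains only "book", and the serial scan needs three steps to reach "admired". The sketch also hints at why cues matter so much under direct access: a cue set that overlaps with several items (for instance, the single cue "noun") would contact all of them at once.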

The role of memory in language and communication

Types of memory

Multiple memory systems

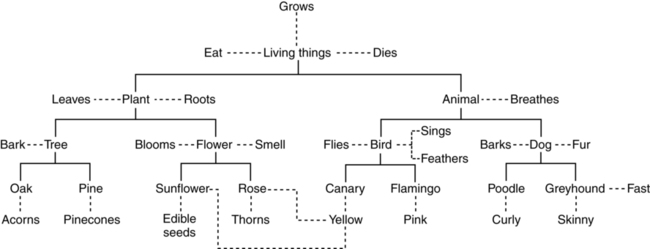

Semantic memory

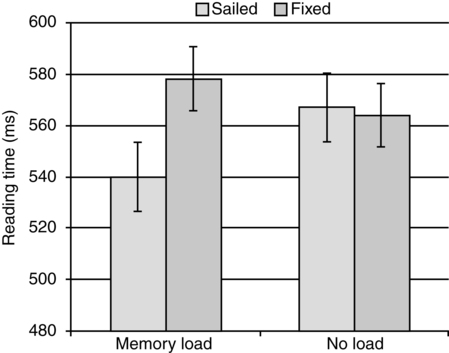

Episodic memory

Nondeclarative memory

Procedural memory

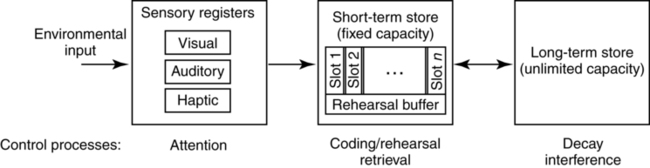

Working memory

Working memory and language comprehension

Problems with the capacity view
