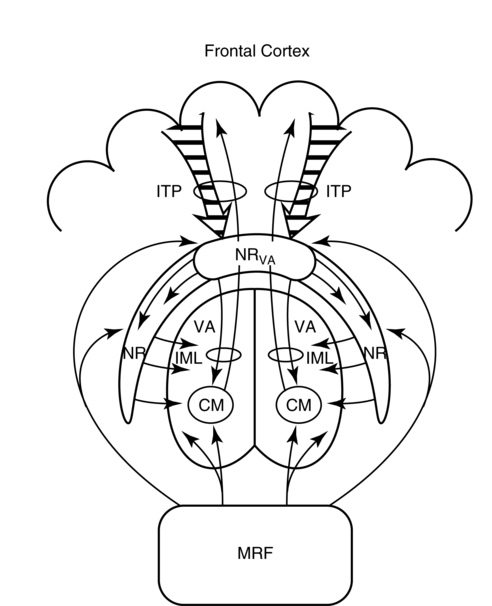

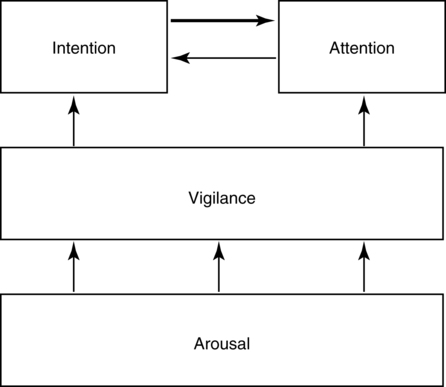

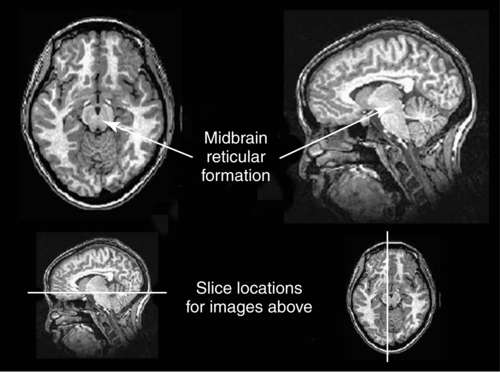

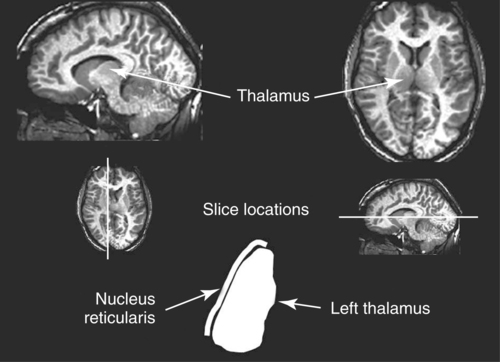

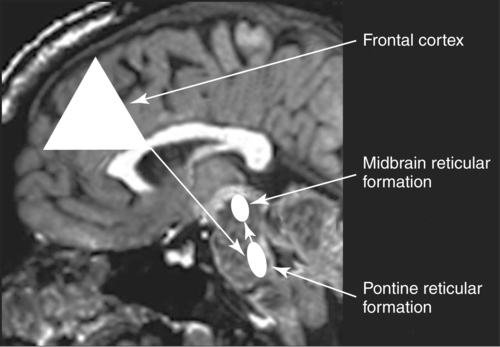

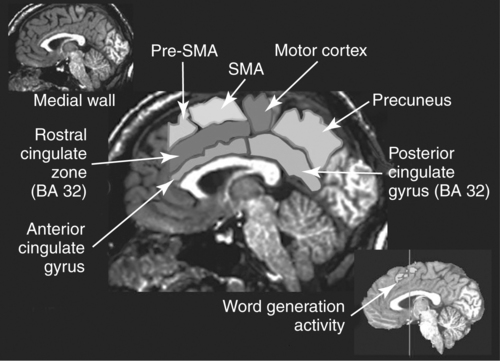

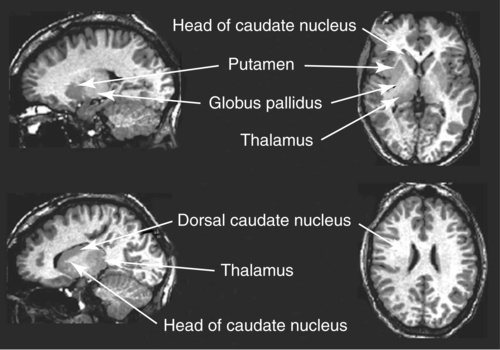

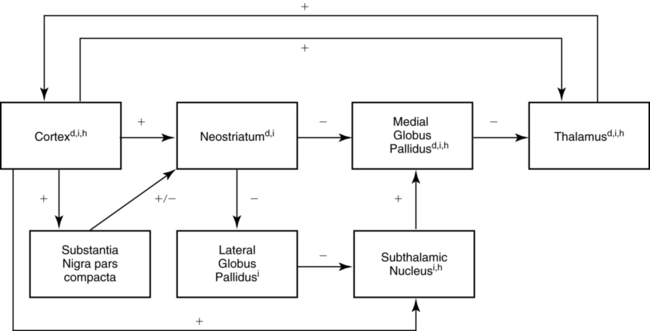

CHAPTER 8

Bruce Crosson and Matthew L. Cohen

For example, consider having a conversation in a crowded room with many others talking around you. There may be three, or four, or even five conversations within earshot on which you might focus. What allows you to focus on the speech of the specific person to whom you are talking? That ability lies within the realm of attention (which we will define formally shortly). Put simply, we filter out the irrelevant sources of information (i.e., other conversations) using attention mechanisms and focus on the one source of information important to us.

Why must we focus on a single source of information to the exclusion of others? The answer is that the brain system we use for language comprehension has a limited capacity in the amount of incoming linguistic information it can process at one time. These capacity limitations lie within the realm of working memory. Working memory can be defined as the ability to hold items in one’s immediate grasp so that they can be used for other processes (e.g., comprehension of a communication). It is widely accepted that the number of items that can be held in working memory is limited. Attempts to quantify working memory capacity go at least as far back as the work of Miller (1956), but research has continued on this topic (Cowan, 2001). It is not our purpose to elaborate on working memory and its capacity. It suffices to note that limited capacity of processing resources constitutes a bottleneck in processing through which only a limited amount of information can pass. What concerns us here are the processes that keep a particular resource from being overwhelmed by more information than it can handle, which would lead to a deterioration in processing.

Now that we have discussed the assumption of limited capacity for processing incoming information or for executing actions, we can turn to more formal definitions of the components of attention.
Posner and Boies (1971) broke attention down into three components: arousal, vigilance, and selective attention. Work reviewed by Heilman and colleagues (Heilman, Watson, & Valenstein, 2003), however, suggests that the selective attention component can be further divided into separate intention and attention processes. Arousal and vigilance are basic states supporting more complex forms of attention (Figure 8-1). Arousal refers to a physiological state underlying a general readiness to act or to receive and process incoming information. It can be contrasted with sleep or coma, states in which the organism is ready neither to act nor to receive and process incoming information. Arousal can be thought of as having degrees, and the greatest cognitive efficiency is assumed when one is neither hyperaroused nor hypoaroused. Since arousal supports vigilance, intention, and attention, these other forms of attention are affected when arousal is above or below optimal levels.

We touched on intention and attention earlier. A good place to begin this elaboration regarding these two forms of attention is with the observation of the Russian neuroanatomist Vladimir Alekseyevich Betz that the brain is basically an elaboration of the spinal cord (Betz, 1874). That is, the anatomic organization of the spinal cord (motor functions in the anterior portion and sensory functions in the posterior portion) is recapitulated in the brain. Put another way, the anterior telencephalon (frontal lobes) is concerned with the planning and execution of action and the posterior telencephalon (temporal, parietal, and occipital lobes) is concerned with the sensation and perception of internal and external information. Although this idea is something of an oversimplification, it is useful in conceptualizing brain organization (e.g., see Fuster, 2003).
Intention can be thought of as the action equivalent of attention, that is, the ability to select one among many potential actions for execution and to initiate that action. Hence, intention governs what actions are performed; therefore, intention mechanisms regulate a good deal of the information and action processing in anterior cortices. In the realm of language, if you were asked to name one kind of bird, you would have to select one to say among the dozens of kinds of birds you know. Another example would be choosing the best sentence structure to state an idea from among several potential structures. Intention also has been called “executive attention” by Fuster (2003), but the concept is essentially the same as that of intention.

To this point, we have discussed intention and attention as if they were entirely separate mechanisms. However, as we negotiate our surroundings in everyday life, intention and attention mechanisms influence each other. This interaction is represented in Figure 8-1 by the arrows between intention and attention mechanisms. Indeed, an important maxim is that what we intend to do determines to what we attend. For example, if I intend to pour a cup of coffee, I must attend to the location of the cup. From a communication standpoint, if I intend to carry on a conversation with you, I must attend to what you are saying. Nadeau and Crosson (1997) referred to this kind of attention as intentionally guided attention. It is represented by a somewhat larger arrow in Figure 8-1 because our intentions so commonly determine the items to which we attend.

However, it is also true that attention can affect intention. For example, suppose you are at a baseball game and you notice that the ball has been hit into the stands right at you. Because of the impending danger, the ball will capture your attention, and you will take evasive action.
As an example relevant to communication, imagine you are at a party talking to a friend and someone calls your name. The stimulus of someone calling your name will temporarily capture your attention and you will orient to that stimulus so that you can determine who is calling you and if any further action is necessary.

Before proceeding to a discussion of neural substrates, one further observation should be made regarding the nature of intention and attention mechanisms. To this point, our discussion about capacity limitations has revolved around the limited capacity within a modality. For example, we noted that our processing of language is limited by the amount of information we can hold in our immediate attention (i.e., working memory). McNeil and his colleagues have studied the interface between attention and language, using dual-task paradigms where either linguistic or nonlinguistic tasks compete with linguistic tasks (for a review, see Hula & McNeil, 2008). In general, the literature indicates that when linguistic and nonlinguistic tasks are performed almost simultaneously, they can interfere with each other, suggesting that they are competing for utilization of mechanisms common to both. For example, identifying a tone as high or low can interfere with picture naming. The usual interpretation is that the tasks are competing for utilization of attention (or intention) mechanisms or resources. Hence, simultaneous performance of tasks that require different kinds of processing may interfere with each other, for example, talking on a cell phone and driving a car.

Finally, it is worth noting that Hula and McNeil (2008) attribute symptoms of aphasia to impairment in such attention mechanisms. We do not subscribe to this viewpoint, believing instead that impaired linguistic, attention, and intention mechanisms contribute to the symptoms of various aphasia syndromes.
Although further discussion of our differences in frameworks is beyond the scope of this chapter, it is worth reading Hula and McNeil’s (2008) review for a different viewpoint.

Since Moruzzi and Magoun (1949) demonstrated the role of the brainstem reticular formation, and particularly its midbrain component (Figure 8-2), in arousal, the midbrain reticular formation has been assumed to play a key role in arousal. However, since that time, it has been shown that arousal is affected by other structures as well, such as the thalamus (Figure 8-3). Sherman and Guillery (2006) reviewed the literature indicating that thalamic rhythms have a role in arousal. In the state of sleep, for example, thalamic relay cells reside in a mode of rhythmic bursting, where fidelity of information transfer to the cortex is low. In the waking state, thalamic neurons are often in a single-spike, high-fidelity mode of information transfer, where the output and input show good correspondence.

The nucleus reticularis is a thin shell of neurons surrounding the lateral and anterior aspects of the thalamus in which corticothalamic and thalamocortical axons give off collaterals (Figure 8-3). The main inhibitory (GABAergic) targets of cells in the nucleus reticularis are cells in the various thalamic nuclei. The firing mode of cells in the nucleus reticularis appears to affect the mode (burst versus single-spike) of thalamic relay nuclei. In turn, the midbrain reticular formation projects to the nucleus reticularis. Many of the profound effects of the midbrain reticular formation on arousal and, in turn, on intention and attention, are thought to be mediated by these connections at the thalamic level (Heilman et al., 2003). Finally, Heilman et al. (2003) have noted that specific cholinergic pathways involving the midbrain reticular formation may be involved in arousal.

The neurobiology of vigilance has not received as much attention in the literature as the neurobiology of arousal.
However, it is clear that adequate arousal is necessary for vigilance. Hence, damage to or dysfunction in any of the arousal mechanisms mentioned earlier will lead to problems in vigilance. Indeed, subtle problems in arousal may be manifested as disturbances in vigilance. Further, sustaining intention or attention over time requires the vigilance to do so. As a result, it is likely that frontal mechanisms involved in intention (see later) are also involved in vigilance. Frontopontine fibers, for example, are known to reach the vicinity of the pontine reticular formation (Parent, 1996), where they could affect the ascending reticular formation and modulate or help to sustain arousal (Figure 8-4).

The anatomy of intention (and attention) has been discussed by Heilman and colleagues (2003). As noted earlier, intention mechanisms regulate processing in the anterior cortices. Medial frontal cortices are known to be involved in the intention aspects of language, and damage to these cortices results in akinetic mutism (Barris & Schuman, 1953; Nielsen & Jacobs, 1951), a syndrome in which language expression (and other activities) is initiated only with externally guided (exo-evoked) stimuli, such as very significant urging by an examiner or other person interacting with the patient. The supracallosal medial frontal cortex (Figure 8-5) can be divided into the anterior cingulate gyrus, the rostral cingulate zone, the supplementary motor area (SMA), and the pre–supplementary motor area (pre-SMA). Based on our research in word production, the portion of this cortex at the junction of pre-SMA and the rostral cingulate zone seems to be most important for word finding (Crosson, Sadek, Bobholz, et al., 1999; Crosson, Sadek, Maron, et al., 2001; Crosson, Benefield, Cato, et al., 2003).

The basal ganglia (Figure 8-6) are also involved in the intentional aspects of language, though their influence may be more subtle.
These influences seem to be regulated by cortical-basal ganglia-cortical circuits (Figure 8-7) that are connected primarily, though not exclusively, to the frontal lobes (Alexander, DeLong, & Strick, 1986; Middleton & Strick, 2000). Crosson and colleagues (Crosson, Benjamin, & Levy, 2007) have adapted models of movement (Gerfen, 1992; Mink, 1996; Penney & Young, 1986) to explain empirical data regarding the participation of the basal ganglia in language (Copland, 2003; Copland, Chenery, & Murdoch, 2000b; Crosson et al., 2003). The essentials of the model are that the basal ganglia enhance actions selected for execution (see Figure 8-7) and suppress alternative actions competing with the selected action (see Figure 8-7). In other words, the basal ganglia can be thought of as increasing the signal-to-noise ratio for a given output (i.e., behavior) (Kischka et al., 1996). Nambu and colleagues (Nambu, Tokuno, & Takada, 2002) have proposed that the basal ganglia also reset (clear) the system to allow switching from one action to another (see Figure 8-7).

The effects of basal ganglia damage or dysfunction on language are more subtle than those of medial frontal damage. It is well established now that basal ganglia damage alone does not cause aphasia (Hillis, Wityk, Barber, et al., 2002; Nadeau & Crosson, 1997). Thus, patients with Parkinson disease or basal ganglia lesions might demonstrate deficits in aphasia batteries only for the most difficult tasks, like naming low-frequency items or word fluency (i.e., naming as many items as possible beginning with a given letter or belonging to a given semantic category) (Copland, Chenery, & Murdoch, 2000a). However, the effects of Parkinson disease or basal ganglia lesion are seen on more complex language tasks, such as defining words or stating two concepts for an ambiguous sentence.
The effects of medial frontal damage would be more pervasive than those of Parkinson disease or basal ganglia lesion because of the patient’s inability to initiate their own responses after medial frontal damage.

A final distinction regarding intention should be made: the difference between endo-evoked and exo-evoked intention (Heilman et al., 2003). Endo-evoked intention refers to the selection and initiation of action (including cognitive actions) based on some internal motivation or state, while exo-evoked intention refers to behavior that is evoked by external stimulation. The akinesia (lack of or decreased movement) seen in patients with Parkinson disease can be thought of as a disorder of endo-evoked intention; Parkinsonian patients have difficulty initiating behavior based on internal thoughts or motivations. However, if a strong external stimulus is applied (e.g., if someone were to shout “fire”), the difficulty initiating action (e.g., jumping up and moving away from danger) is mitigated.

As noted earlier, attention governs which sources of information are selected for further processing in the posterior cortices. The medial posterior cortices (posterior cingulate/precuneus region, Figure 8-5) and the parietal lobe play a large role in attention (Heilman et al., 2003). The effects of parietal lesions on attention often have hemispatial consequences on the side of space opposite the lesion. It has been shown, for example, that patients with parietal lesions might perform language tasks better when stimuli are presented in their ipsilesional hemispace (Coslett, 1999).

Thalamic nuclei also play a crucial role in attention (Sherman & Guillery, 2006). Essentially, this mechanism involves the firing modes in which thalamic neurons reside, as discussed earlier.
There is a high-fidelity mode of information transfer from the periphery (single-spike mode) for attended items and a low-fidelity mode of information transfer (burst mode) for unattended items. These authors have speculated that even cortico-cortical operations might be governed by similar mechanisms.

As noted earlier, intention and attention systems interact constantly during our everyday existence. Nadeau and Crosson (1997) called this relationship between the two systems intentionally guided attention, and they posited an anatomic mechanism (Figure 8-8).

Language and communication disorders associated with attentional deficits

Arousal, vigilance, intention, and attention mechanisms: the basics

Neural substrates of arousal, vigilance, intention, and attention


… cortex (structures with a superscripted “d”). The indirect subloop includes cortex → neostriatum → lateral globus pallidus → subthalamic nucleus → medial globus pallidus → thalamus → cortex (structures with a superscripted “i”). Structures in the hyperdirect subloop include cortex → subthalamic nucleus → medial globus pallidus → thalamus → cortex (structures with a superscripted “h”). The “+” indicates the excitatory neurotransmitter glutamate, the “−” indicates the inhibitory neurotransmitter GABA, and the “±” indicates dopamine, which has a net facilitation effect on neostriatal neurons projecting into the direct subloop and a net suppression effect on neostriatal neurons projecting into the indirect subloop.