(1) Hand Surgery, Department of Clinical Sciences, Malmö, Lund University, Skåne University Hospital, Malmö, Sweden

Abstract

A mind-controlled artificial hand is a good solution for improving quality of life for many amputees. However, considering the extremely well-developed motor and sensory functions of the human hand, developing a useful alternative is an enormously challenging task. The ideal hand prosthesis should be capable of intuitively executing the delicate movements and precision grips that we use daily at work and at leisure. It should possess sensory functions to ensure a feeling of embodiment, provide sensory feedback for regulating grip strength and offer tactile discriminative functions. However, despite advanced technological achievements in the prosthetic field, only some of these aspirations have been fulfilled. The prosthetic hands available on the market today are controlled by EMG signals from the forearm muscles, and their function is limited to little more than opening and closing the hand. Various principles for providing them with sensory functions are currently being tried in many centres, and more refined motor functions are being achieved with computerised pattern-recognition systems that associate specific patterns of myoelectric signals with specific movements of the hand. In experimental studies, and in clinical trials on completely paralysed patients, recordings from needle electrodes implanted into motor areas of the brain have been used successfully to control movements of artificial arms and hands. In the future, the function of mind-controlled robotic hands will presumably come much closer to that of a human hand.

Substituting an artificial hand for a missing one is a long-established concept. Cosmetic prostheses with the appearance of normal hands were used by the Egyptians thousands of years ago. During the sixteenth and seventeenth centuries, various types of hand prostheses were constructed that could be opened and closed with help from the healthy hand, and various spring mechanisms were used to give a firm grip, for instance around the hilt of a sword. One story concerns a German knight, Götz von Berlichingen, who lost his right hand in battle at the age of 24. Götz constructed an iron hand with movable fingers so that he could grip a sword and a lance. With this 1.4-kg iron hand, he continued to serve actively on the battlefield for another 40 years, and he felt that it worked at least as well in battle as his real hand had. Today Götz’s iron hand is on display at a museum in Nuremberg.

For a long time, interest in developing hand and arm prostheses was quite limited, and cosmetic prostheses, or simple hooks controlled by shoulder movements, were the only prostheses available. The number of people with amputations at the arm and forearm levels has been small compared with the number of patients with lower limb amputations, as diabetes often makes it necessary to amputate a leg below the knee. However, the thalidomide disaster, in which many children born in the late 1950s and early 1960s had limb deficiencies, created a growing interest in constructing artificial hands during the late 1960s and early 1970s [1–5].

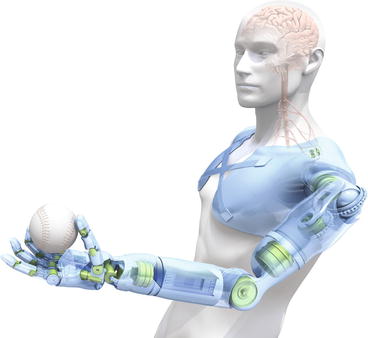

A functional artificial hand for amputees must fulfil certain requirements. It should be based on a mechanical construction that allows movements and grips that are useful for the amputee, and the hand’s performance should be intuitively controlled by the user’s mind. The user’s thoughts and intentions should be automatically transformed into movements of the prosthesis. This vision represents an enormous biomechanical and neurobiological challenge (Fig. 16.1) [6].

Fig. 16.1

Vision of a mind-controlled hand/prosthesis as displayed in National Geographic in January 2010 (Illustration: Bryan Christie)

In movies and computer games, it is easy to be impressed by how well advanced robotic hands mimic the function of normal hands and how easily they can be repaired or replaced if needed. In reality, however, we have a long way to go before reaching such a goal. Making a hand prosthesis move and function intuitively in response to our thoughts requires some kind of functional connection between the prosthesis and the nervous system: a man–machine interface. In theory, there are various ways of achieving this. A limited functional connection with the brain can be established by recording brain activity with electrodes on the outside of the skull, as in EEG examinations. However, more precise and accurate recording of brain activity requires thin needle electrodes implanted directly into the brain. This technique has frequently been used in animal experiments, and such a direct connection between the brain and a hand prosthesis, using advanced control algorithms for pattern recognition, has also been used in a small number of totally paralysed patients.

A simpler alternative for establishing contact with the nervous system would be to place recording electrodes around or inside a nerve trunk in the amputee’s residual arm and to use the electrical activity in the nerve to control movements of the hand prosthesis via cables penetrating the skin. However, this technique is associated with several biological and mechanical problems. A far more attractive concept, which is used today in all commercially available mind-controlled prostheses, is to record and utilise the electrical signals generated by muscle activity in the residual forearm, so-called EMG (electromyographic) signals. Even after the hand has been amputated, it is still possible to imagine movements of the missing hand [7]: the motor area of the brain is activated, and electrical signals are transmitted to the forearm muscles that would normally regulate hand movements.

Controlling an Artificial Hand Through Direct Recordings from the Brain

The principle of controlling devices such as an artificial hand through recordings made directly from the brain was tested clinically in order to enable communication with the outside world for totally paralysed patients with ‘locked-in syndrome’, a state of total isolation from the outside world caused by a spinal injury close to the brain or by a severe neurological disease [8–19]. In 2000, there were reports of three patients with ‘locked-in syndrome’ in whom fine needle electrodes had been implanted in the brain to record the electrical activity of specific groups of motor nerve cells [10]. The subjects were able to voluntarily raise and lower a cursor sweeping horizontally across a computer screen, so that the cursor hit a chosen letter in a vertical row of letters on the right edge of the screen. After training, the paralysed patients were able to hit specific letters and spell out words. Among the first words spelled by one of the patients were the names of two of the scientists who had made it possible for him to communicate with the outside world for the first time; the sequence of letters was ‘KENEDY GQLDXWAIJTEF’ (Kennedy, Goldwaithe) [10].
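The selection principle described above (a cursor that sweeps steadily to the right while the user steers it up or down, selecting whichever letter it reaches at the right edge) can be illustrated with a toy simulation. It is a minimal sketch only: the per-step vertical commands, the screen width and the one-letter-per-row layout are hypothetical, and the neural decoding that produced the control signal in the reported patients [10] is not modelled here.

```python
import string

# Hypothetical layout: one letter per row along the right edge, "A" at the top.
LETTERS = list(string.ascii_uppercase)


def spell_letter(row_steps, screen_width=10, letters=LETTERS):
    """Simulate one left-to-right sweep of the cursor.

    row_steps holds one decoded vertical command per horizontal step
    (+1 = move down one row, -1 = move up one row, 0 = hold); this
    encoding is an assumption made for illustration.
    Returns the letter the cursor hits on reaching the right edge.
    """
    row = 0  # the cursor starts each sweep at the top row
    for x in range(screen_width):
        if x < len(row_steps):
            row += row_steps[x]
        # The cursor cannot leave the screen vertically.
        row = max(0, min(len(letters) - 1, row))
    return letters[row]


def spell_word(sweeps, **kwargs):
    """Spell one letter per sweep of the cursor."""
    return "".join(spell_letter(steps, **kwargs) for steps in sweeps)


if __name__ == "__main__":
    # Four downward steps, then hold: the cursor ends on row 4.
    print(spell_letter([1, 1, 1, 1, 0, 0, 0, 0, 0, 0]))  # prints "E"
```

Because the horizontal sweep is automatic, the user only has to control a single dimension, which is one reason such a scheme suits a low-bandwidth brain signal.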

From the beginning, the objective in these trials was to record signals from individual nerve cells, but it was soon realised that arrays containing a large number of microelectrodes gave the best results [8, 19–21]. Miguel Nicolelis at Duke University in Durham, North Carolina, is a pioneer in transforming thoughts into activities and movements of artificial extremities using microelectrode arrays introduced into the brain [20, 21]. His early laboratory experiments involved implanting microelectrodes into the motor cortices of monkeys [22–25]. The signal patterns generated when a monkey performed simple trained movements of the hand and arm were recorded by the electrode arrays and processed in an artificial neural network, which learned to associate specific signal patterns with specific arm movements. The system was connected to a robotic arm that could then perform the corresponding movements simultaneously with the monkey’s own movements: as soon as the monkey initiated an arm movement, the associated signal pattern had already been registered and interpreted by the computer, and the robotic arm performed an identical movement at the same time.
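The learning step described above, in which a network is trained to associate recorded activity patterns with arm movements, can be illustrated with the simplest possible artificial neural network: a single linear unit trained with the Widrow–Hoff (least-mean-squares) rule. This is a sketch under stated assumptions, not the decoder used in the cited studies: the "firing rates", the neurons' tuning weights and the single hand coordinate are synthetic and hypothetical.

```python
import random


def predict(weights, bias, rates):
    """Linear read-out: hand position = weighted sum of firing rates + bias."""
    return sum(w * r for w, r in zip(weights, rates)) + bias


def train_lms(rate_vectors, positions, learning_rate=0.01, epochs=200):
    """Fit weights and bias with the Widrow-Hoff (LMS) update rule.

    Each training pair is one time bin: the firing rates of the recorded
    neurons and the hand position measured in that bin.
    """
    weights = [0.0] * len(rate_vectors[0])
    bias = 0.0
    for _ in range(epochs):
        for rates, target in zip(rate_vectors, positions):
            error = target - predict(weights, bias, rates)
            # Nudge each weight in proportion to its input and the error.
            weights = [w + learning_rate * error * r for w, r in zip(weights, rates)]
            bias += learning_rate * error
    return weights, bias


def make_synthetic_data(true_weights, true_bias, n_bins, seed=0):
    """Hypothetical data: normalised firing rates in [0, 1] and a hand
    position that depends linearly on them (no noise)."""
    rng = random.Random(seed)
    rate_vectors = [[rng.random() for _ in true_weights] for _ in range(n_bins)]
    positions = [predict(true_weights, true_bias, r) for r in rate_vectors]
    return rate_vectors, positions


if __name__ == "__main__":
    true_w, true_b = [0.8, -0.5, 0.3, 0.0], 0.1  # hypothetical neuron tuning
    rates, pos = make_synthetic_data(true_w, true_b, n_bins=200)
    w, b = train_lms(rates, pos)
    print([round(x, 3) for x in w], round(b, 3))
```

The point of the sketch is that the mapping from activity patterns to movement is learned from paired examples rather than programmed by hand; once trained, the same read-out can be applied to new activity as it arrives.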

The monkey Belle became a pioneer in these trials when, one afternoon in the spring of 2000, she was able to voluntarily control movements not only of a robotic arm positioned in the room beside her but also of another robotic arm in a laboratory some 600 miles away, to which the signals were transmitted over the Internet [24]. The experiments were performed at Duke University, but because the computers there were linked with those of the Laboratory for Human and Machine Haptics in Cambridge, Massachusetts, the signals generated in the monkey’s brain induced identical and simultaneous movements of the robotic arms in both places.

It has even proved possible to anticipate the monkey’s movements by ‘reading’ the activity already generated in the brain’s ‘planning centre’, the premotor cortex, where decisions to perform specific movements are made. In this way, the robotic arm performed the movements before the monkey’s own arm did [26]. In 2008, Schwartz and colleagues in Pittsburgh reported a new generation of trials in which monkeys with needle electrodes implanted in their motor cortices could be trained to control advanced, natural three-dimensional movements of a robotic arm [27].

The same principle has been applied on a small scale in patients with ‘locked-in syndrome’. In the BrainGate project, Hochberg and colleagues in Boston, Massachusetts, implanted a 4 × 4 mm array of close to 100 microelectrodes in the motor cortex of a person with long-standing tetraplegia following a brainstem stroke, who was unable to move any muscles in the upper or lower extremities. The signal patterns generated by the patient’s thoughts were decoded by advanced pattern-recognition systems and could be translated into a variety of activities, such as opening email, playing computer games and even initiating movements of a prosthetic hand; these functions were well preserved at follow-up 1,000 days after implantation [28, 29]. In an analogous case, Schwartz, Collinger and colleagues in Pittsburgh, Pennsylvania, recently found that their participant was able to control and move a prosthetic limb in three-dimensional space on the second day of training and, after 13 weeks, was able to perform multidimensional movements [30]. These observations suggest that implanting microelectrodes in the motor cortex is a promising way to improve the quality of life of paralysed patients.

For several years, a development project along the same lines has been under way at the Neuronano Research Center at Lund University in Sweden, with the goal of using nanotechnology to develop a new generation of extremely thin electrodes for permanent implantation in the brain or the spinal cord [31–34]. These electrodes must be made of a material that the brain accepts in the long term without harmful tissue reactions. The problem remains of how to create a functional brain-machine interface that transmits the recordings through the skull to computer equipment outside it, so that the signals can be interpreted and used to regulate movements of an artificial hand or to operate a computer, a wheelchair or robotic devices.

If electrodes can be permanently implanted in the brain, a dramatic new landscape could open up for the rehabilitation of severely disabled individuals, but new types of ethical problems that we have not previously encountered would also arise. When a man–machine interface is created, where does the individual end and the machine begin? Who is responsible for involuntary actions performed by a machine or robotic device linked to a patient’s nervous system via a computerised man–machine interface? And who takes responsibility for the risks associated with long-term implantation of needle electrodes in the brain? There are many questions, but so far few answers.

With electrodes in the brain, our wishes and thoughts can be translated into controlled processes in computers, robotic devices and artificial hands. However, the same principle allows signals to be transmitted in both directions, including into the brain. Externally generated electrical signals can be used to stimulate specific areas of the brain, a technique already used to treat Parkinson’s disease (deep brain stimulation) and chronic pain.

However, the possibility of linking the human brain to a computer also opens a frightening outlook for the future [35]. Although it may eventually become possible to treat specific psychiatric disorders, it may also become possible to eliminate empathic and inhibitory systems to produce, for instance, effective soldiers. On the other hand, perhaps it will one day be possible to boost fading memory, to improve learning and other intellectual functions, or to plug in a USB stick containing a dictionary program to make a vacation trip to a foreign country more enjoyable.

Controlling an Artificial Hand Using Electrodes Implanted in a Nerve

Normally, hand movements are based on activation of the motor cortex, with electrical signals transmitted to the hand and forearm via the spinal cord and the nerve trunks of the arm. Even after a hand amputation, it is still possible to imagine movements of the hand that no longer exists, thereby activating the cortical motor areas [7]. One attractive concept is to use direct recordings of electrical activity in the nerves to control the hand prosthesis, and clinical trials along these lines have been carried out [36–38]. However, this technique is associated with several problems, including obtaining recordings from the appropriate fibre components in the nerve and managing skin-penetrating wires.

Kevin Warwick at the University of Reading in the UK performed some spectacular experiments, reported in 2003, to demonstrate the potential of direct recordings from peripheral nerves [39]. These trials had a great impact in the media. Warwick had an array of about 100 microelectrodes surgically implanted into the median nerve of his own arm at the distal forearm level. The median nerve is the major sensory nerve of the hand and also innervates several of the thumb muscles, so the procedure was far from risk-free.

When Warwick moved his hand, the electrodes recorded the signals generated in the median nerve. The microelectrode array was connected to a computer via skin-penetrating wires, and with this equipment Warwick was able to control movements of a hand prosthesis and even to steer an electric wheelchair by activating his median nerve.

Intuitively Controlling an Artificial Hand Through EMG Signals: Myoelectric Prostheses

An attractive concept for controlling movements of a mind-controlled hand prosthesis is to use the EMG signals elicited by muscle activity in the residual forearm [2, 3]; the hand prostheses currently on the market, so-called myoelectric prostheses, are all based on this principle. By the time the motor signals from the brain reach the muscles of the residual forearm, they have already been sorted along the way into the groups of muscles that normally interact to perform specific, intuitive hand movements and grips. Surface electrodes placed on the skin over the extensor and flexor muscles can monitor the contractions and electrical activity of these muscles, and the resulting EMG signals can be used to open and close a prosthetic hand. The principle is simple and ingenious, and this type of prosthesis has been available on the market for several decades. However, these prostheses have very simple functions, and amputees often perceive them as primitive and slow.
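How two surface electrodes can drive a simple open/close hand may be sketched as follows. The raw EMG from each site is full-wave rectified and smoothed into an amplitude envelope, and the two envelopes are compared with a threshold. The window length, the threshold value and the "stronger signal wins" rule are assumptions chosen for illustration; commercial controllers use their own schemes.

```python
def emg_envelope(samples, window=5):
    """Full-wave rectify raw EMG samples and smooth them with a moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        recent = rectified[max(0, i - window + 1): i + 1]
        envelope.append(sum(recent) / len(recent))
    return envelope


def two_site_command(flexor_level, extensor_level, threshold=0.2):
    """Map the current flexor and extensor envelope levels to a motor command.

    Flexor activity above threshold closes the hand, extensor activity
    opens it; if both are active, the stronger signal wins.
    """
    if flexor_level > threshold and flexor_level >= extensor_level:
        return "close"
    if extensor_level > threshold:
        return "open"
    return "hold"


if __name__ == "__main__":
    # Hypothetical raw signals (arbitrary units) from the volar (flexor)
    # and dorsal (extensor) electrodes while the user tries to close the hand.
    flexor_raw = [0.5, -0.6, 0.55, -0.5, 0.6]
    extensor_raw = [0.05, -0.04, 0.05, -0.06, 0.05]
    flex = emg_envelope(flexor_raw)[-1]
    ext = emg_envelope(extensor_raw)[-1]
    print(two_site_command(flex, ext))  # prints "close"
```

Rectification is needed because raw EMG oscillates around zero; only its amplitude reflects how strongly the muscle is contracting. The "hold" state keeps the hand still when neither muscle group is deliberately activated.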

Dr Rolf Soerbye, head of the Department of Neurophysiology in the city of Örebro, Sweden, was a pioneer in the development of a myoelectric hand prosthesis for children. Soerbye used EMG signals – electrical signals generated by contractions in the forearm muscles – to control movements in the hand prosthesis [1]. The simple mechanical construction of the hands allowed for voluntary opening and closing of the prosthetic hand. Soerbye used an effective trick to make the training enjoyable for the children – he let them control small electric toy cars using EMG signals from their forearm. The children were able to make the cars move in various directions by activating the extensor or flexor muscles in their forearms.

Conventional myoelectric prostheses usually use surface electrodes. One electrode, placed on the volar aspect of the forearm, ‘listens to’ and records contractions and electrical activity in the flexor muscles, while the other is positioned dorsally over the extensor muscles to record extensor muscle activity. Voluntary activation of the flexor muscles drives an electric motor in the hand prosthesis that closes the hand, while activation of the extensor muscles opens it. With more than two recording electrodes, however, the system can identify patterns of muscle signals and, using pattern-recognition algorithms, associate them with specific movements, with the aim of achieving more complex movements of the artificial hand. This principle was used in the early 1990s by a research team at Sahlgrenska Hospital at Gothenburg University to develop a mind-controlled hand prosthesis called the SVEN hand. However, the hand was too fragile, and the technology was not sufficiently advanced to make long-term daily use possible [40].
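The pattern-recognition approach with more than two electrodes can be sketched in the same spirit. Each short window of multichannel EMG is reduced to one feature per electrode (here the mean absolute value), and a new window is assigned to the movement whose average training pattern lies closest. The nearest-centroid classifier is a deliberately simple stand-in for the classifiers used in practice, and the four-channel layout, movement names and amplitudes are hypothetical.

```python
import math


def mav_features(window):
    """Mean absolute value of each channel.

    window is a list of samples; each sample holds one value per electrode.
    """
    n_channels = len(window[0])
    return [sum(abs(sample[c]) for sample in window) / len(window)
            for c in range(n_channels)]


def train_centroids(labelled_windows):
    """Average the feature vectors of all training windows of each movement."""
    sums, counts = {}, {}
    for label, window in labelled_windows:
        features = mav_features(window)
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(window, centroids):
    """Return the movement whose centroid is nearest (Euclidean distance)."""
    features = mav_features(window)
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


def synthetic_window(levels, n_samples=20):
    """Hypothetical zero-mean signal with a given amplitude on each channel."""
    return [[level if i % 2 == 0 else -level for level in levels]
            for i in range(n_samples)]


if __name__ == "__main__":
    training = [
        ("close hand", synthetic_window([0.8, 0.1, 0.1, 0.1])),
        ("open hand", synthetic_window([0.1, 0.8, 0.1, 0.1])),
        ("pinch", synthetic_window([0.6, 0.1, 0.6, 0.1])),
        ("rest", synthetic_window([0.05, 0.05, 0.05, 0.05])),
    ]
    centroids = train_centroids(training)
    print(classify(synthetic_window([0.55, 0.1, 0.5, 0.1]), centroids))  # prints "pinch"
```

Unlike the two-site scheme, where each electrode maps to one function, here it is the combination of activity across all electrodes that identifies the intended movement, which is what allows more than two hand functions to be controlled.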

After the first clinical trials, further development of hand prostheses was at a standstill for a long time. Although the available myoelectric prostheses can often be very useful, many hand amputees refuse to use them [41, 42], often because they find them too primitive and too slow [4, 5]. A major reason for the limited use of hand prostheses, however, is the lack of sensibility: there is no sensory feedback from the artificial hand, so it is not perceived as a natural part of the body; the brain does not develop a sense of body ownership of the prosthesis [43].

With the evolution of more advanced technology, prostheses have become more user-friendly in recent decades [6]. In addition, general interest in the development of limb prostheses has grown since the wars in Iraq and Afghanistan and other conflicts in the Middle East have caused an increasing number of amputation injuries. In the USA, Canada and the UK, substantial sums are being invested in developing technically advanced hand and arm prostheses, and there are also several ongoing projects within the European Union. The goal is to develop a new generation of mind-controlled artificial hands, with improved electronics and new materials, capable of performing most of the functions of a normal hand, for instance gripping a small ball or pencil, picking up and handling a key, pouring water from a bottle, lifting a glass of water and picking up small items from a flat surface. However, a decisive factor for how well a hand prosthesis is perceived as belonging to the body is the sensory feedback it provides. Achieving sensory feedback in a prosthetic hand is an enormous challenge and the subject of several current research projects in the field of prosthetics.

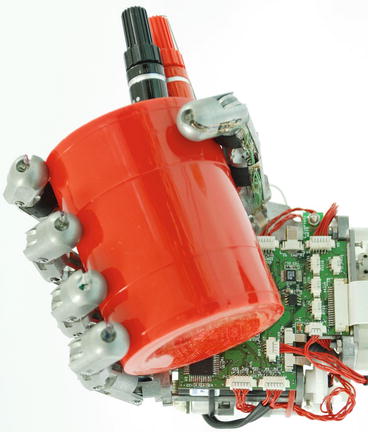

For many years, development projects aimed at such ‘intelligent’ myoelectric prostheses have been under way at several of the world’s research centres and laboratories [44–61]. In many of these projects, the concept is to incorporate several miniature electric motors into the hand mechanics to give individual mobility to the thumb and fingers. An important design feature is that the hand is ‘adaptive’ and automatically adjusts its grip to the shape of the object. When such an artificial hand grips a mug, the thumb, index and middle fingers close around the mug first, while the ring and little fingers continue closing until they too encircle it (Fig. 16.2).
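The adaptive closing behaviour can be illustrated with a toy simulation in which each finger has its own motor and stops independently once it meets the object. This is a sketch under simplifying assumptions, not the SmartHand controller or any commercial one: contact is modelled as a known flexion position per finger (a real hand might instead detect contact from, for example, a rise in motor current or a force-sensor signal), and the contact positions for the mug are hypothetical.

```python
def adaptive_close(contact_at, step=5, fully_closed=100):
    """Close all fingers in parallel; each stops when it touches the object.

    contact_at maps each finger to the flexion position (0 = fully open,
    fully_closed = fully flexed) at which it meets the object, or None if
    the finger closes completely without touching anything.
    Returns the final positions and the order in which the fingers stopped.
    """
    position = {finger: 0 for finger in contact_at}
    stopped_at_tick = {}
    tick = 0
    while len(stopped_at_tick) < len(contact_at):
        tick += 1
        for finger, contact in contact_at.items():
            if finger in stopped_at_tick:
                continue  # this finger's motor has already stopped
            limit = fully_closed if contact is None else contact
            position[finger] = min(position[finger] + step, limit)
            if position[finger] >= limit:
                stopped_at_tick[finger] = tick
    order = sorted(stopped_at_tick, key=stopped_at_tick.get)
    return position, order


if __name__ == "__main__":
    # Hypothetical contact positions for a mug: the thumb, index and middle
    # fingers meet its surface early; ring and little fingers must flex further.
    mug = {"thumb": 40, "index": 45, "middle": 45, "ring": 70, "little": 80}
    final_positions, order = adaptive_close(mug)
    print(order)  # prints ['thumb', 'index', 'middle', 'ring', 'little']
```

Because every finger stops on its own contact, the same single "close" command produces a grip that conforms to objects of different shapes without the user having to control each finger separately.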

Fig. 16.2

The artificial hand developed in the SmartHand project grips a mug. The hand adapts its grip to the shape of the mug to make the grip effective (Image supplied by Marco Controzzi)

The Austrian company Otto Bock has long dominated the hand prosthetic market and is continuously improving its models. Early models used a single motor that opened and closed the prosthetic hand, but newer models, such as the Michelangelo hand, have two separate motor units, one for the fingers and one for the thumb, making more grip functions possible. The most recent model allows the wrist to be passively placed in several positions, and research is ongoing to create motorised wrists capable of rotation and flexion.

Several international companies are currently working to develop improved, more advanced hand prostheses. Among the models on the market that allow various grip functions is the i-Limb prosthesis, developed in Edinburgh and manufactured by Touch Bionics. The i-Limb Hand is built in modules, with a separate motor incorporated in each finger, so that individual parts of the hand can easily be replaced if mechanical problems occur. The construction allows several grip positions, such as handling a key or picking up small items from a flat surface. Other models with multiple incorporated motors and many useful grip functions are under development by companies in the USA, the UK, Germany, Italy and China.
